Re-Structure Ahead in Big Data & Spark

Yaron Haviv | March 16, 2016

Big Data used to be about storing unstructured data in its raw form – . “Forget about structures and Schema, it will be defined when we read the data”. Big Data has evolved since – The need for Real-Time performance, Data Governance and Higher efficiency is forcing back some structure and context.

Traditional Databases have well defined schemas which describe the content and the strict relations between the data elements. This made things extremely complex and rigid. Big Data initial application was analyzing unstructured machine log files so rigid schema was impractical. It then expanded to CSV or Json files with data extracted (ETL) from different data sources. All were processed in an offline batch manner where latency wasn’t critical.

Big Data is now taking place at the forefront of the business and is used in real-time decision support systems, online customer engagement, and interactive data analysis with users expecting immediate results. Reducing Time to Insight and moving from batch to real-time is becoming the most critical requirement. Unfortunately, when data is stored as inflated and unstructured text, queries take forever and consume significant CPU, Network, and Storage resources.

Big Data today needs to serve many use cases, users, and large variety in content, data must be accessible and organized for it to be used efficiently. Unfortunately traditional “Data Preparation” processes are slow and manual and don’t scale, data sets are partial and inaccurate, and dumped to the lake without context.

As the focus on data security is growing, we need to control who can access the data and when. When data is unorganized there is no way for us to know if files contain sensitive data, and we cannot block access to individual records or fields/columns.

Structured Data to the rescue

To address the performance and the data wrangling challenge , new file formats like Parquet and ORC were developed. Those are highly efficient compressed and binary data structures with flexible schema. It is now the norm to use Parquet with Hive or Spark since it enables much faster data scanning and allows reading only the specific columns which are relevant to the query as opposed to going over the entire file.

Using Parquet, one can save up to 80% of storage capacity comparing to a text format while accelerating queries by 2-3x.

The new formats force us to define some structure upfront, with the option to expand or modify the schema dynamically unlike older legacy databases. Having such schema and metadata helps in reducing data errors, and makes it possible for different users to understand the content of the data and collaborate. With built-in metadata it becomes much simpler to secure and govern the data and filter or anonymize parts of it.

One challenge with the current Hadoop file based approach regardless if it is unstructured or structured data, is that updating individual records is impossible, and it is constrained to bulk data uploads. This means that dynamic and online applications will be forced to rewrite an entire file just to modify a single field. When reading an individual record, we still need to run full scans instead of selective random reads or updates. This is also true for what may seem to be sequential data, e.g. delayed time series data or historical data adjustments.

Spark moving to structured data

Apache Spark is the fastest growing analytics platform and can replace many older Hadoop based frameworks, it is constantly evolving and trying to address the demand for interactive queries on large datasets, real-time stream processing, graphs and machine learning. Spark has changed dramatically with the introduction of “Data Frames”, in-memory table constructs that are manipulated in parallel using machine optimized low-level processing (see project Tungsten). DataFrames are structured and can be mapped directly to variety of Data Sources via a pluggable API, this include:

- Files such as: Parquet, ORC, Avro, Json, CSV, etc.

- Databases such as: MongoDB, Cassandra, MySQL, Oracle, HP Vertica, etc.

- Cloud Storage like Amazon S3 and DynamoDB

DataFrames can be loaded directly from external databases, or be created from unstructured data by crawling and parsing the text (a long and CPU/Disk intensive task). DataFrames can be written back to external data sources and it can be done in a random and indexed fashion if the backend support such operation (e.g. in the case of a Database).

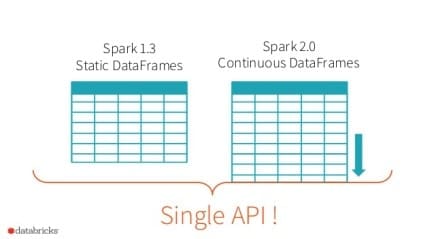

Spark 2.0 release adds “Structured Streaming “, expanding the use of DataFrames from batch and SQL to streaming and real-time. This will greatly simplify the data manipulation task and speed up performance – Now we can use streaming, SQL, Machine-Learning, and Graph processing semantics over the same data.

Spark is not the only streaming engine moving to structured data, Apache Druid delivers high performance and efficiency by working with structured data and columnar compression.

Summary

New applications are designed to process data as it gets ingested and react in seconds or less instead of waiting for hours or days. The Internet of Things (IoT) will drive huge volumes of data which in some cases may need to be processed immediately to save or improve our lives. The only way to process such high volumes of data while lowering the time to insight is to normalize, clean, and organize the data as it lands in the Data Lake and store it in highly efficient dynamic structures. When analyzing massive amounts of data, we better run over structured and pre-indexed data. This will be faster in orders of magnitudes.

With SSDs and Flash at our disposal there is no reason to re-write an entire file just to update individual fields or records – We’d better harness structured data and only modify the impacted pages.

At the center of this revolution we have Spark and DataFrames. After years of investment in Hadoop some of its projects are becoming superfluous and are displaced by faster and simpler Spark based applications. Spark engineers made the right choice and opened it up to a large variety of external data sources instead of sticking to the Hadoop’s approach and forcing us to copy all the data into a crippled and low-performing file-system … yes I’m talking about HDFS.