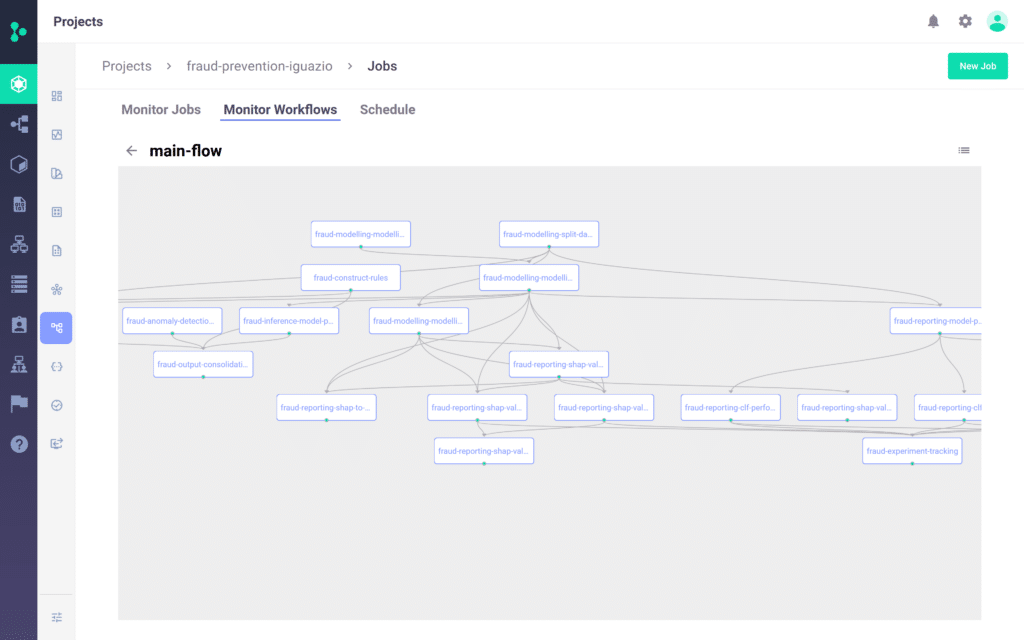

Automated and Continuous

How to continuously roll out AI services with CI/CD for ML and a production-first approach to MLOps

Thank you for downloading this whitepaper

The PDF has been sent to your email. If you have questions, feel free to reach out to info@iguazio.com.