Handling Large Datasets in Data Preparation & ML Training Using MLOps

Alexandra Quinn | January 18, 2021

Operationalizing ML remains the biggest challenge in bringing AI into business environments

Data science has become an important capability for enterprises looking to solve complex, real-world problems, and generate operational models that deliver business value across all domains. More and more businesses are investing in ML capabilities, putting together data science teams to develop innovative, predictive models that provide the enterprise with a competitive edge — be it providing better customer service or optimizing logistics and maintenance of systems or machinery.

While the development of autoML tools and on cloud platforms, along with the availability of inexpensive on-demand compute resources is making it easier for businesses to become ML-driven, and lowering the barrier to AI adoption, effectively developing production-ready ML requires data scientists to imbue operational ML pipelines. Along with this challenge, enterprises are finding it challenging to work with really large datasets which are essential to creating accurate models in complex environments.

To operationalize any piece of code in data science and analytics and get it to production, data scientists need to go through four major phases:

- Exploratory data analysis

- Feature engineering

- Training, testing and evaluating

- Versioning and monitoring

While each of the above phases can be complex and time consuming, the major challenge with productionizing ML models doesn’t end here. The real challenge lies in building, deploying and continuously operating a multi-step pipeline with the ability to automatically retrain, revalidate and redeploy models at scale. As such, data scientists need a way to effortlessly scale up their work and get them from notebooks onto cloud platforms (especially when working with large datasets) to achieve enterprise AI at scale.

This is the key to achieving an integrated ML pipeline that delivers value in real-world applications. What’s more, such a pipeline must have the ability to automatically handle large multivariate data (with constantly evolving data profiles) at low latency.

Constructing such pipelines is a very daunting task. However, there are a couple of open-source tools available for working with data at scale in a simple and reproducible way.

To learn more about handling large data sets in a simple and efficient way with MLOps best practices, watch this on-demand MLOps Live session here.

Working with Data at Scale

Each phase of building an integrated ML pipeline (as well as the data science lifecycle) typically requires different frameworks and tools. This is particularly true for mechanisms relating to development and training versus those for operationalizing and productionizing. There are a variety of open-source frameworks, tools and APIs for manipulating and managing multivariate data.

However, these tools don’t scale suitably with growing volumes of data in terms of memory usage and processing time. Consequently, a lot of data scientists prefer to use distributed computing tools. However, this complicates development and production processes since data scientists need to retool workflows while moving back and forth between a Python ecosystem and a JVM world.

Also, there are a lot of instances in which data scientists need to work with a sizeable collection of data that’s too large to fit into their laptop or local machine’s memory. While it’s possible to use native Python file streaming and other tools to iterate through the dataset without loading it into memory, this will lead to speed limitations since jobs are running on a single thread.

On one hand, you can speed things up with parallelization…however, it can be incredibly difficult to get it right, and even so, you’re still limited by the resources in your local machine.

Leveraging Distributed Computing for Large-Scale Data Processing Within ML Pipelines

Distributed computing is the perfect solution to this dilemma. It distributes tasks to multiple independent worker machines, each of which handles chunks of the dataset in its own memory and dedicated processor. This allows data scientists to scale code on very large datasets to run in parallel on any number of workers. This goes beyond parallelization where jobs are executed in multiple processes or in threads on a single machine.

While Spark remains a popular tool for transitioning to a distributed compute environment, it comes with several limitations; it’s relatively old, a bit tricky to work with (when prototyping on local machines), and limited in its capability to handle certain tasks like large multi-dimensional vector operations or first-class machine learning. On the plus side, it’s ideal for data scientists who prefer a more SQL-oriented approach for working with enterprises with JVM infrastructure and legacy systems.

Fortunately, the availability of a Python-based data processing framework like Dask means data scientists don’t need to reinvent the wheel or work with tools like Spark (if they prefer not to).

Dask combines the flexibility of Python development for data science with the power of distributed computing. It ensures seamless integration with common Python data tools, allowing data scientists to use the same native Python code at scale, without the need to learn other technologies. This reduces the learning curve for data scientists forced to work with non Python-based data processing frameworks.

Dask is the go-to large-scale data processing tool for data scientists:

- who prefer Python or native code

- who have large legacy code bases that they don’t want to rewrite

- who work with complex use cases or applications that do not neatly fit the Spark computing model

- who prefer a lighter-weight transition from local computing to cluster computing

- who want to easily interoperate with other technologies

Dask isn’t really a data tool; it’s more of a distributed task scheduler. It orchestrates delayed objects together with dependencies into an execution graph, making it the ideal processing framework for highly sophisticated tasks.

The scheduler manages the states of each workload, splits and sends jobs to the different machines available, computes the tasks individually, stores and then serves the computed results back to you. Also, it can run on a single local machine as well as (with minimal tweaks to the code) scale up to a thousand-worker cluster use case.

Enterprises can simplify tedious ML operations by leveraging Dask’s auto-scaling and parallelism capabilities, enabling their data scientists to process the same tasks at a fraction of the time, while cutting infrastructure costs through the on-demand allocation of server and GPU resources. On Dask, it’s easy to move from a Python function and parallelize execution on multiple workers on a distributed system…which isn’t easy to accomplish in Spark and other frameworks.

This brings a lot of velocity to data science teams, especially since Dask removes the need to default to data engineers when it’s time to scale up workloads.

This demo shows a simple task, demonstrating that Dask is about five times faster than Spark in processing large workloads.

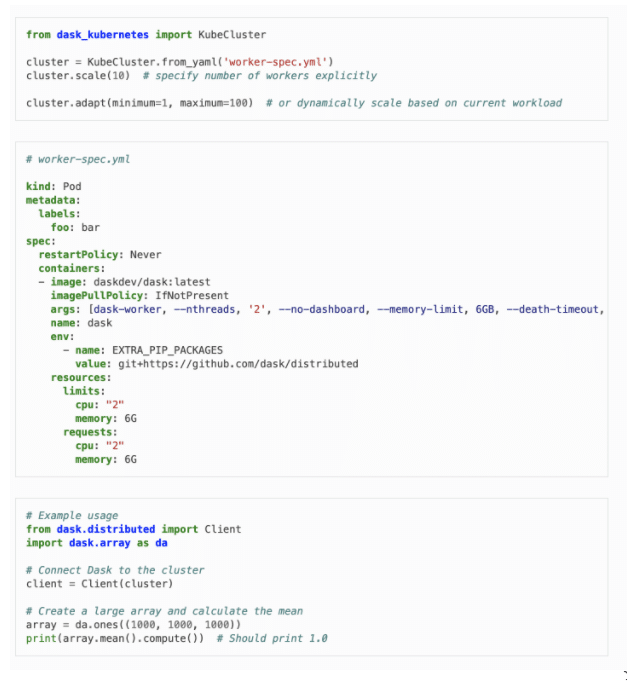

Running Dask over Kubernetes

While it’s easy to deploy Dask on a single machine, scaling it on a cluster (to improve resource utilization) is a bit trickier. The best way for data scientists to do this is by leveraging Kubernetes to scale beyond a single machine onto a cluster and run Dask in a distributed and elastic way.

Using Dask on Kubernetes gives data scientists the option to scale manually or create auto-scaling rules within the Kubernetes configuration. This improves resource utilization and delivers tangible cost efficiency (since too many servers lying around doing nothing really drives up cost).

Also, it provides a high-speed, shared data layer for seamless scaling as well as some level of abstraction, enabling data scientists to configure workflows in a way that makes the infrastructure side of things completely transparent to the user.

Dask on Kubernetes provides cluster managers for Kubernetes. KubeCluster deploys Dask workers on Kubernetes clusters using native Kubernetes APIs. It is designed to dynamically launch short-lived deployments of workers during the lifetime of a Python process.

Currently, it’s designed to be run from a pod on a Kubernetes cluster that has permissions to launch other pods. However, it can also work with a remote Kubernetes cluster (configured via a kubeconfig file), as long as it is possible to interact with the Kubernetes API and access services on the cluster.

Here’s a quick-start setup example:

Best Practices

- Your worker pod image should have a similar environment to your local environment, including versions of Python, Dask, Cloudpickle, and any libraries that you may wish to use (like NumPy, Pandas, or Scikit-Learn). See the dask_kubernetes.KubeCluster docstring for guidance on how to check and modify this.

- Your Kubernetes resource limits and requests should match the

--memory-limitand--nthreadsparameters given to thedask-workercommand. Otherwise your workers may get killed by Kubernetes as they pack into the same node and overwhelm that nodes’ available memory, leading toKilledWorkererrors. - We recommend adding the

--death-timeout, '60'arguments and therestartPolicy: Neverattribute to your worker specification. This ensures that these pods will clean themselves up if your Python process disappears unexpectedly.

One of the disadvantages of running Dask over Kubernetes is that there aren’t any processes for managing the lifecycle of containers. This is where MLRun can be a great solution. MLRun, an open source project by Iguazio, is a framework with a runtime element that enables Spark or Dask workloads to look like serverless jobs.

With MLRun, data scientists can write code once and build a full pipeline that combines model objects or model files from Dask, Spark, or other engines and automatically convert them into a real-time serving pipeline. MLRun automates the building of containers, configurations, and packages, and other resources…complete with server and automated lifecycle management.

You can see an example of how to use MLRun with Dask here.

Combining Dask with Kubernetes and MLRun enables you to scale data preparation and training while maximizing performance at any scale.