6 Best Practices for Implementing Generative AI

Alexandra Quinn | January 14, 2025

Generative AI has rapidly transformed industries by enabling advanced automation, personalized experiences and groundbreaking innovations. However, implementing these powerful tools requires a production-first approach that maximizes business value while mitigating risks.

This guide outlines six best practices to ensure your generative AI initiatives are valuable, scalable, compliant and future-proof. From designing efficient pipelines to leveraging cutting-edge customization techniques, we’ll walk through the steps that will help your organization harness the full power of generative AI.

1. Create Modular Pipelines to Orchestrate Operationalization End-to-End

AI pipelines automate and optimize each step of the AI lifecycle, from data management and development to deployment and monitoring. This is particularly important in large-scale gen AI projects, which handle massive datasets and complex models.

Streamlining the entire process saves engineering resources, reduces operational bottlenecks and fosters collaboration across teams, from data scientists to DevOps. It also helps models stay accurate and reliable over time.

Here’s our suggestion for four pipelines that will bring your models from the lab to production.

- Data management - Ensuring data quality through data ingestion, transformation, cleansing, versioning, tagging, labeling, indexing, and more.

- Development - High-quality model training, fine-tuning or prompt tuning, validation and deployment with CI/CD for ML.

- Application - Bringing business value to live applications through a real-time application pipeline that handles requests, data, the model and validations.

- LiveOps - Improving performance, reducing risks and ensuring continuous operations by monitoring data and models for feedback.
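As a rough illustration of how the four pipelines above might be composed, the Python sketch below chains them through a shared context dictionary. The `Pipeline` class, the step functions and the toy data are all hypothetical placeholders chosen for this example; they do not come from any specific product or library.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A step receives the shared context and returns the (updated) context.
Step = Callable[[Dict], Dict]


@dataclass
class Pipeline:
    """A named, ordered list of steps (hypothetical helper for this sketch)."""
    name: str
    steps: List[Step] = field(default_factory=list)

    def run(self, context: Dict) -> Dict:
        for step in self.steps:
            context = step(context)
        return context


# --- Data management: ingest and cleanse toy records ---
def ingest(ctx: Dict) -> Dict:
    ctx["records"] = [" Hello ", "", "World"]
    return ctx


def cleanse(ctx: Dict) -> Dict:
    # Strip whitespace and drop empty records.
    ctx["records"] = [r.strip() for r in ctx["records"] if r.strip()]
    return ctx


# --- Development: stand-in for training or fine-tuning ---
def train(ctx: Dict) -> Dict:
    ctx["model"] = {"vocab": sorted(set(ctx["records"]))}
    return ctx


# --- Application: answer a request using the "model" ---
def serve(ctx: Dict) -> Dict:
    ctx["response"] = ctx["request"] in ctx["model"]["vocab"]
    return ctx


# --- LiveOps: record a simple metric for monitoring ---
def monitor(ctx: Dict) -> Dict:
    ctx["metrics"] = {"records_seen": len(ctx["records"])}
    return ctx


PIPELINES = [
    Pipeline("data_management", [ingest, cleanse]),
    Pipeline("development", [train]),
    Pipeline("application", [serve]),
    Pipeline("liveops", [monitor]),
]


def run_all(pipelines: List[Pipeline], context: Dict) -> Dict:
    """Run each pipeline in order, passing the context along."""
    for pipeline in pipelines:
        context = pipeline.run(context)
    return context


result = run_all(PIPELINES, {"request": "World"})
print(result["response"], result["metrics"])
```

Because each pipeline is just a list of steps, a single stage can be swapped or extended without touching the others, which is the main point of keeping the pipelines modular.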

2. De-Risk Models and Establish AI Governance and Compliance

Operationalizing LLMs comes with unique risks, like hallucinations, toxicity and bias. In addition, enterprises deploying new projects and capabilities need to mitigate risks associated with data privacy, security and compliance.

Establishing guardrails throughout data management, model development, application deployment and LiveOps pipelines helps ensure:

- Fair and unbiased outputs

- IP protection

- PII elimination

- Improved LLM accuracy and performance

- Minimal AI hallucinations

- Filtering of offensive or harmful content

- Alignment with legal and regulatory standards

- Ethical use of LLMs
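To make a couple of these guardrails concrete, here is a minimal Python sketch of an output filter that redacts simple PII patterns and withholds responses containing blocked terms. The regular expressions, the `BLOCKLIST` contents and the function names are assumptions made for illustration; production guardrails typically rely on far more robust detection than these toy patterns.

```python
import re

# Deliberately simple patterns, for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")

# Hypothetical list of terms the application should never emit.
BLOCKLIST = {"badword"}

WITHHELD_MESSAGE = "[response withheld by content filter]"


def redact_pii(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def contains_blocked_terms(text: str) -> bool:
    """Return True if any blocklisted word appears in the text."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return bool(words & BLOCKLIST)


def apply_guardrails(text: str) -> str:
    """Withhold offensive output entirely; otherwise redact PII."""
    if contains_blocked_terms(text):
        return WITHHELD_MESSAGE
    return redact_pii(text)


print(apply_guardrails("Contact jane@example.com or 555-123-4567."))
```

The same kind of check can sit at more than one point, for example on incoming data in the data management pipeline and on model output in the application pipeline.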

3. Customize Your LLMs

LLM customization is the process of tailoring or adjusting an LLM to better suit specific use cases or applications. Rather than relying solely on pre-trained models, customization allows organizations to adapt models using their proprietary data. This improves the model's relevance, accuracy and performance for specific business needs, for example by ensuring that a customer-facing LLM’s output is on brand with the organization’s tone, voice and messaging.

LLM customization techniques include:

- RAG (Retrieval-Augmented Generation) - Incorporating external, real-world and dynamic data sources that were not part of the model’s initial training.

- Fine-tuning - Further training the model on more specific datasets relevant to a particular domain or industry. Check out this demo of fine-tuning a gen AI chatbot.

- RAFT (Retrieval Augmented Fine-Tuning) - A combination of RAG and fine-tuning. This involves integrating external knowledge sources during the fine-tuning stage of a language model.

- Prompt Engineering - Using LLM prompts or input formats like templates and guidelines that guide the model to produce desired outputs.

- Agents - Using Conversational Retrieval Agents and vector stores to provide tailored answers to user queries.

- Performance Optimization - Enhancing the model’s efficiency and accuracy in handling specific tasks through parameter tuning, architecture adjustments, or deployment optimizations.

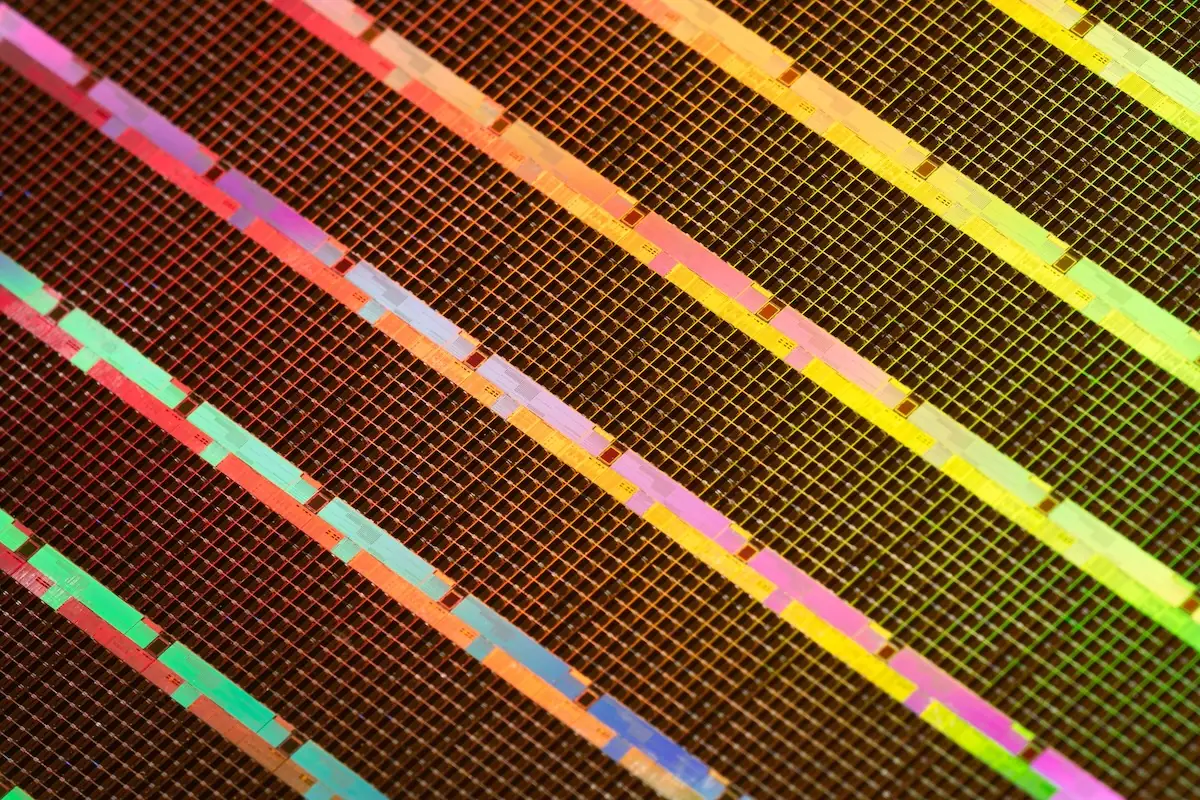
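To show the basic shape of RAG combined with a prompt template, the Python sketch below retrieves the most relevant snippets for a question and places them in the prompt. It is a simplified stand-in: retrieval here is plain keyword overlap rather than embeddings and a vector store, no model is actually called, and `DOCUMENTS`, `retrieve` and `build_prompt` are hypothetical names invented for this example.

```python
import re
from typing import List, Set

# Toy knowledge base standing in for proprietary documents.
DOCUMENTS = [
    "Refunds are processed within 5 business days.",
    "Support is available Monday through Friday.",
    "Premium plans include priority support.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents sharing the most tokens with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Fill a prompt template with the retrieved context and the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
    )


print(build_prompt("How long do refunds take?", DOCUMENTS))
```

In a real system the resulting prompt would be sent to the LLM, and the keyword scoring would usually be replaced by semantic search over an index that is kept up to date with new data.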

4. Manage Resources Efficiently - GPU Provisioning

Organizations need GPUs to process large amounts of data simultaneously, speed up computational tasks and handle specific applications such as AI and data visualization. This need is likely to grow as the demand for computational power and real-time processing increases across industries.

However, GPUs are scarce and expensive. They are also commonly under-utilized due to inefficient resource allocation, data bottlenecks, complicated DevOps and limited support for use cases beyond deep learning, which leaves room to get more out of the hardware you already have.

GPU provisioning is an effective, resource-efficient way to scale. This includes:

- Assigning GPUs to training engines or notebooks

- Dynamically scaling up on demand

- Dynamically scaling down on demand, all the way to zero
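The scaling behavior listed above can be sketched as a small decision function. This is only an illustration of the idea, assuming demand is measured as a count of queued jobs; the function name, its parameters and the numbers in the usage lines are hypothetical and do not describe any particular platform's autoscaler.

```python
import math


def desired_gpu_count(queued_jobs: int, jobs_per_gpu: int, max_gpus: int) -> int:
    """Return how many GPUs to allocate for the current queue.

    Scales up with demand, is capped at max_gpus, and drops to zero
    when there is no work waiting.
    """
    if jobs_per_gpu <= 0:
        raise ValueError("jobs_per_gpu must be positive")
    if max_gpus < 0:
        raise ValueError("max_gpus must not be negative")
    if queued_jobs <= 0:
        return 0  # scale to zero when idle
    return min(max_gpus, math.ceil(queued_jobs / jobs_per_gpu))


for queue in (0, 5, 100):
    print(queue, desired_gpu_count(queue, jobs_per_gpu=4, max_gpus=8))
```

Releasing every GPU when the queue is empty is what keeps idle capacity from being billed or blocked, while the cap keeps a burst of demand from exhausting a shared pool.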

Read more about GPU provisioning with Iguazio here.

5. Ensure Flexible Deployment Options

Different organizations require different types of gen AI infrastructure, based on varying workloads and operational demands. Cloud platforms provide scalability, on-premises deployments address strict latency, data sovereignty or security requirements, and hybrid deployments combine these benefits, offering the agility of cloud services with the control and customization of on-prem infrastructure.

For example, in industries like finance, regulatory mandates often dictate that sensitive data be stored and processed on-premises, while less critical workloads can be offloaded to the cloud to reduce cost.

For successful flexible deployment, organizations need robust orchestration tools that can manage workloads across environments seamlessly. Ensure capabilities like automation, network optimization, and strong security protocols are supported across diverse infrastructure setups.
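As one way to picture hybrid placement, the Python sketch below routes each workload to on-premises or cloud infrastructure based on data sensitivity and latency needs. The `Workload` fields, the `choose_target` function and the 20 ms threshold are illustrative assumptions; a real placement policy would reflect your own regulatory and operational constraints.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a workload to be placed."""
    name: str
    handles_sensitive_data: bool
    max_latency_ms: Optional[int] = None  # None means no strict latency need


def choose_target(workload: Workload, strict_latency_ms: int = 20) -> str:
    """Pick a deployment target for the workload.

    Sensitive data and strict latency requirements stay on-premises;
    everything else is offloaded to the cloud.
    """
    if workload.handles_sensitive_data:
        return "on_prem"
    if (
        workload.max_latency_ms is not None
        and workload.max_latency_ms <= strict_latency_ms
    ):
        return "on_prem"
    return "cloud"


workloads = [
    Workload("transaction-scoring", handles_sensitive_data=True),
    Workload("realtime-assistant", handles_sensitive_data=False, max_latency_ms=10),
    Workload("batch-summaries", handles_sensitive_data=False),
]
for w in workloads:
    print(w.name, "->", choose_target(w))
```

Keeping the policy in one small function makes it easy to audit and to change when regulations or infrastructure change.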

6. Future-Proof Your Architecture

Future-proofing your architecture supports long-term scalability, adaptability and cost-efficiency. One key strategy is adopting open-source technologies in your design. Open-source tools help you avoid being locked into a proprietary solution, allowing for easier integration with other platforms and future innovations. Additionally, because the code is open, these tools can be continuously updated and improved by their communities, helping your infrastructure stay current with the latest advancements in the field.

Examples of open-source tools for gen AI include LangChain, LLaMA, Falcon, BLOOM, Mistral, ModelScope, StarCoder, Whisper, Hugging Face's multimodal models, TensorFlow, PyTorch, MLRun, and many others.

Conclusion

Generative AI is reshaping how businesses operate, innovate and engage with their audiences. By following these six best practices, organizations can unlock the full potential of generative AI while minimizing risks. Ready to get started and tackle the challenges of tomorrow?