How to Manage Thousands of Real-Time Models in Production

Gilad Shapira | April 28, 2025

Two years after Seagate first shared its AI and MLOps success story, the data storage leader is revealing how far it has come since then. In this blog post, you’ll see how the team manages thousands of AI models in production with only a few team members. This is thanks to their AI factory, which does the heavy lifting of automating processes like monitoring, testing, mocking and more.

You can hear more details in the webinar this article is based on, straight from Kaegan Casey, AI/ML Solutions Architect at Seagate.

How Seagate overcame their AI challenges with an AI Factory

Iguazio’s AI factory has helped the Seagate team overcome multiple common MLOps challenges:

- Project POC to Deployment Gap - The gap between experimentation and deployment often stems from differing environments (e.g., moving from a local machine or virtual machine to a K8s cluster) and the need for bespoke deployments. These variations result in slow feedback loops and delays in reaching production. Iguazio allows the team to go from testing code locally to running at scale on a remote cluster within minutes.

- Visibility - Many teams experience fragmented experiment tracking and a lack of collaboration and project visibility. Iguazio allows projects to be shared between diverse teams, provides detailed logging of parameters, metrics and ML artifacts, and supports artifact versioning, including labels, tags, associated data, etc.

- Integrations - Due to legacy requirements, the team dealt with diverse factory inputs and outputs for real-time or batch inference data. Nuclio function triggers (HTTPS, RabbitMQ, Kafka, etc.) and MLRun's function customizability addressed this gap. Both Nuclio and MLRun are open-source tools contributed and maintained by Iguazio.

- Scalability - Deploying a large number of pretrained models into memory is challenging, and so is training large batches of models. Iguazio allows on-demand, customizable serving even for thousands of small models, and it supports parallel model training.

- Flexibility - The team needed support for a bespoke architecture and diverse data types. Iguazio allowed for diverse stream trigger options, the ability to route requests to multiple models simultaneously, customizable model serving functions and more.

- Deployment - Different organizations need to deploy on different types of infrastructure. Iguazio can be deployed on-premises, in the cloud or in hybrid environments.

- Monitoring - Many existing monitoring tools and applications require setup per use case. Iguazio standardizes application logs and metric outputs, so every application gets Prometheus and Grafana monitoring and log forwarding out of the box.

- Vendor Support - Iguazio's support team proved highly responsive, collaborating with Seagate's infrastructure team on high availability of the entire system, issue resolution and solution design.
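As a rough illustration of what "standardized application logs" can mean in practice, the sketch below uses only Python's standard `logging` module to emit every record as one JSON object with a fixed set of fields, so a single log-forwarding or dashboard configuration can parse output from any application. The `JsonFormatter` class, the `make_logger` helper and the field names (`app`, `level`, `msg`, `metric`) are hypothetical and are not Iguazio's actual log schema.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line with a fixed (hypothetical) schema."""

    def __init__(self, app_name: str):
        super().__init__()
        self.app_name = app_name

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "app": self.app_name,
            "level": record.levelname,
            "msg": record.getMessage(),
        }
        # Optional metrics passed via logger.info(..., extra={"metric": {...}}).
        metric = getattr(record, "metric", None)
        if metric is not None:
            payload["metric"] = metric
        return json.dumps(payload, sort_keys=True)


def make_logger(app_name: str, stream) -> logging.Logger:
    """Create a logger whose only handler writes JSON lines to `stream`."""
    logger = logging.getLogger(app_name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(app_name))
    logger.addHandler(handler)
    logger.propagate = False  # avoid duplicate, unformatted output via the root logger
    return logger


if __name__ == "__main__":
    buf = io.StringIO()
    log = make_logger("tool-health", buf)
    log.info("inference complete", extra={"metric": {"latency_s": 0.12}})
    print(buf.getvalue().strip())
```

Because every application shares one schema, downstream tooling only has to understand a single line format.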

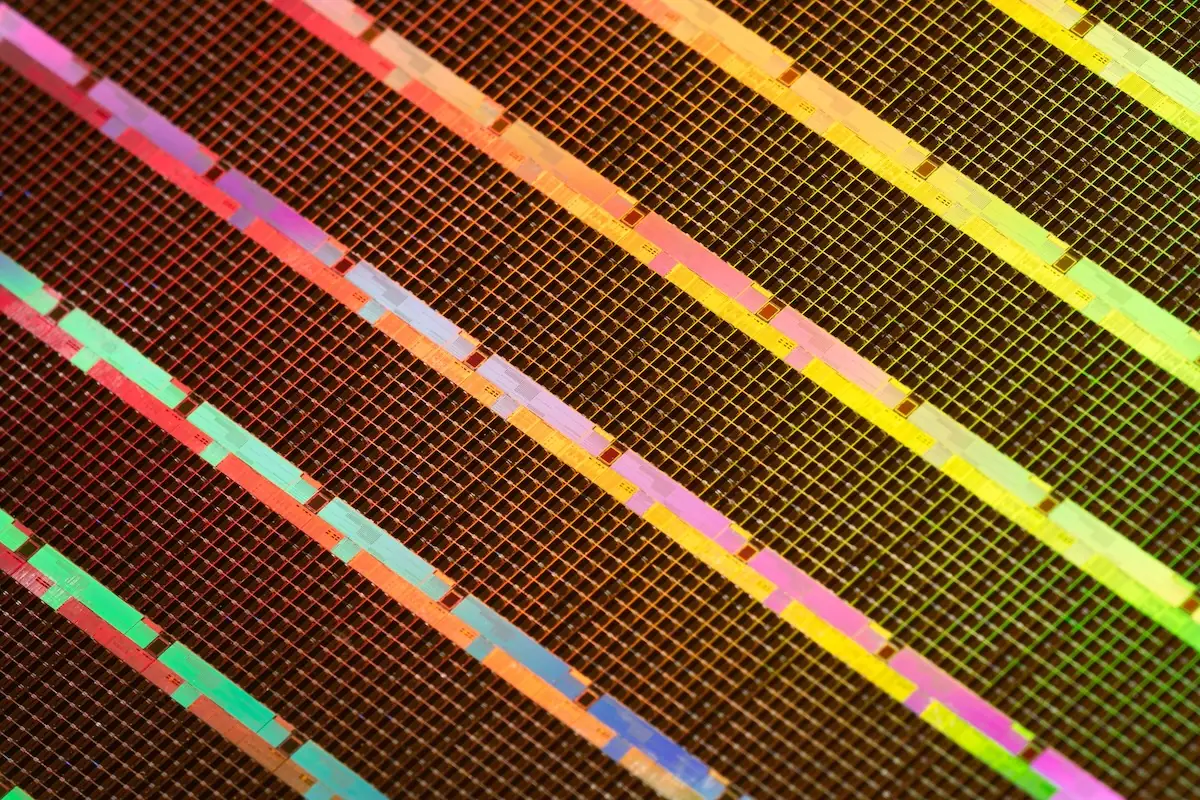

What are Seagate's Wafer Factories?

Seagate used Iguazio to make its wafer factories and wafer manufacturing process more efficient.

Before we continue, here’s a brief explanation of the terms used:

- Hard Drive - A hard drive is made up of many precision parts. The head and the disk are two of the most important. Semiconductor-type wafer processing methods allow for economical manufacturing of heads that read and write data onto the disk.

- Wafer - A wafer is a circular ceramic disk that is made up of many layers of materials. A completed wafer is 200mm (8") in diameter and 1mm thick (about the thickness of a penny).

Why This Matters:

Seagate can build over 100,000 recording heads from one completed wafer. However, wafer creation is very complex. The raw wafer is a ceramic substrate upon which materials are sequentially layered, and the thickness of each layer is controlled with exacting precision. The layering process can include more than 2,800 steps that form patterns of electrical conductors and magnetic material on the wafer, and a single wafer can take four to eight months to complete. With that much invested, there is little room for error: an unusable wafer represents a significant loss of time and money.

Project #1: Unsupervised Tool Health Monitoring at Scale

Seagate has 24+ groups of semiconductor manufacturing tools running 24/7. When processing wafers or other materials, these tools use IoT sensors to measure parameters like current, voltage and pressure every two seconds. Each tool generates tens of millions of data samples daily that need to be monitored.
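A quick back-of-the-envelope calculation (an estimate derived from the two-second sampling interval, not a figure reported by Seagate) shows how fast this adds up. One sensor reporting every two seconds produces

$$
\frac{86{,}400\ \text{s/day}}{2\ \text{s/sample}} = 43{,}200\ \text{samples per sensor per day},
$$

so reaching tens of millions of samples per tool per day implies, assuming every sensor reports at the full rate, at least

$$
\frac{10{,}000{,}000\ \text{samples/day}}{43{,}200\ \text{samples/sensor/day}} \approx 231\ \text{sensor channels per tool}.
$$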

Despite the massive scale, the project is staffed with only two data scientists/MLOps engineers, one application developer, and dozens of equipment and process engineers who interpret alerts and maintain operational integrity and health.

Opportunities for Value Realization Through an AI Factory

- Cost reduction through scrap avoidance

- Scheduled maintenance planning

- Increased sensor coverage and control

- New insights

- Increased metrology skip rate

- Investigations funneled toward failures

- Lower storage/compute costs

Seagate’s AI Factory Architecture

The AI factory is completely automated. The only manual step in the process is the initial model configuration, which serves as a blueprint for what to monitor and with which models. This setup happens once per toolset and is stored in a database. It takes about a week and can be fine-tuned over time.

The system then automatically generates model configurations and determines whether enough data exists to train, whether retraining is needed, whether models are available for inference, and the current status of each model. New configurations are added as factory processes evolve.
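The per-model decisions described above (is there enough data to train, is retraining needed, is the model available for inference) can be pictured as a small status function evaluated for every configuration. The sketch below is hypothetical: the `ModelConfig` fields, the minimum-sample threshold and the retraining interval are illustrative assumptions, not Seagate's actual rules.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ModelConfig:
    """Hypothetical blueprint entry: which sensor on which toolset to monitor, and how."""
    toolset: str
    sensor: str
    algorithm: str                                # e.g. "pretrained" or "rolling_window"
    min_samples: int = 1_000                      # assumed minimum data needed to train
    retrain_every: timedelta = timedelta(days=7)  # assumed retraining interval


def model_status(cfg: ModelConfig, n_samples: int,
                 last_trained: Optional[datetime], now: datetime) -> str:
    """Return one of: 'insufficient_data', 'train', 'retrain', 'ready'."""
    if last_trained is None:
        # Never trained: train only once enough data has accumulated.
        return "train" if n_samples >= cfg.min_samples else "insufficient_data"
    if now - last_trained >= cfg.retrain_every:
        # Model is stale: retrain if possible, otherwise keep serving the old one.
        return "retrain" if n_samples >= cfg.min_samples else "ready"
    return "ready"
```

Keeping the decision a pure function of the stored configuration and a few counters makes it easy to re-evaluate for thousands of configurations on a schedule.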

Two types of algorithms are used: one pretrained and one trained live on a rolling time window. MLRun handles the training pipeline using Kubeflow, allowing for massively parallel training depending on compute capacity (e.g., training thousands of models concurrently on GPUs).
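To make "trained live on a rolling time window" concrete, here is a minimal plain-Python sketch: each model keeps only the samples from the last `window` seconds and fits a simple mean/standard-deviation baseline, and many such models are fit concurrently with `concurrent.futures`. The baseline statistic and all names are assumptions standing in for whatever unsupervised algorithm Seagate actually uses; in the real system, MLRun and Kubeflow distribute this work across a cluster rather than across threads in one process.

```python
import statistics
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Sample = Tuple[float, float]  # (timestamp_seconds, sensor_value)


def fit_rolling_baseline(samples: List[Sample], now: float, window: float) -> Dict[str, float]:
    """Fit mean/stdev on samples whose timestamp falls in [now - window, now]."""
    recent = [v for t, v in samples if now - window <= t <= now]
    if len(recent) < 2:
        raise ValueError("not enough samples in window to train")
    return {"mean": statistics.fmean(recent), "stdev": statistics.stdev(recent)}


def train_all(data: Dict[str, List[Sample]], now: float, window: float,
              max_workers: int = 8) -> Dict[str, Dict[str, float]]:
    """Train one baseline per sensor key concurrently; skip keys with too little data."""
    models: Dict[str, Dict[str, float]] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {key: pool.submit(fit_rolling_baseline, s, now, window)
                   for key, s in data.items()}
        for key, fut in futures.items():
            try:
                models[key] = fut.result()
            except ValueError:
                pass  # not enough data yet; this key stays untrained for now
    return models
```

Old samples simply fall out of the window on the next run, so each retrain reflects only recent tool behavior.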

After training, models are marked as available for inference and are loaded on demand instead of staying in memory, which supports scalability. Incoming data (e.g., wafer processing data) is streamed via RabbitMQ → V3IO (a Kafka-like internal stream) and enriched with relevant model info.
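Loading models on demand rather than keeping thousands resident can be sketched as a bounded least-recently-used (LRU) cache in front of the model store: a model is fetched the first time it is needed and evicted when the cache is full. The `OnDemandModelCache` class and its `load_fn` callback are hypothetical; in Seagate's setup the models are stored in Iguazio, and the source does not describe the actual eviction policy.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable


class OnDemandModelCache:
    """Bounded LRU cache that loads models lazily through a user-supplied loader."""

    def __init__(self, load_fn: Callable[[Hashable], Any], capacity: int = 100):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._load_fn = load_fn
        self._capacity = capacity
        self._models: "OrderedDict[Hashable, Any]" = OrderedDict()
        self.loads = 0  # how many times the loader was actually called

    def get(self, key: Hashable) -> Any:
        if key in self._models:
            self._models.move_to_end(key)  # mark as most recently used
            return self._models[key]
        model = self._load_fn(key)  # cache miss: fetch from the model store
        self.loads += 1
        self._models[key] = model
        if len(self._models) > self._capacity:
            self._models.popitem(last=False)  # evict the least recently used model
        return model

    def __len__(self) -> int:
        return len(self._models)
```

Memory use is capped by `capacity` regardless of how many models exist, at the cost of a reload whenever an evicted model is requested again.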

Each Nuclio function loads the appropriate model from Iguazio, performs inference, and sends results to a database or stream, or back to RabbitMQ for factory control actions.
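The per-message work described above (look up the model named in the enriched message, run inference, route the result) might look roughly like the function below. Nuclio's Python runtime invokes a `handler(context, event)` entry point; that wrapper is omitted here so the logic runs standalone. The message fields (`model_key`, `features`), the score-threshold rule and the sink names are all hypothetical, and plain lists stand in for the database and the RabbitMQ control channel.

```python
from typing import Any, Callable, Dict, List

Model = Callable[[List[float]], float]  # hypothetical: returns an anomaly score


def process_message(message: Dict[str, Any],
                    get_model: Callable[[str], Model],
                    sinks: Dict[str, List[Dict[str, Any]]],
                    alert_threshold: float = 3.0) -> Dict[str, Any]:
    """Run inference for one enriched message and route the result (sketch)."""
    model = get_model(message["model_key"])  # e.g. backed by an on-demand cache
    score = model(message["features"])
    result = {"model_key": message["model_key"], "score": score,
              "alert": score >= alert_threshold}
    # Every result is persisted so dashboards can aggregate it later.
    sinks["database"].append(result)
    # Only alerts are sent back toward factory control.
    if result["alert"]:
        sinks["factory_control"].append(result)
    return result
```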

Final results are aggregated and presented in dashboards, which serve as the main interaction point for monitoring outcomes.

From the user’s perspective, developers can work locally in their preferred environments (VMs or laptops), using small data samples for debugging and testing. When ready, they can scale seamlessly to a production cluster using orchestration batch scripts. CI/CD is supported by mapping projects to the Git repository.

This workflow supports a monolithic style of project development: the system is centered around a shared codebase with modular applications, each with its own requirements, all organized in a single Git repository.

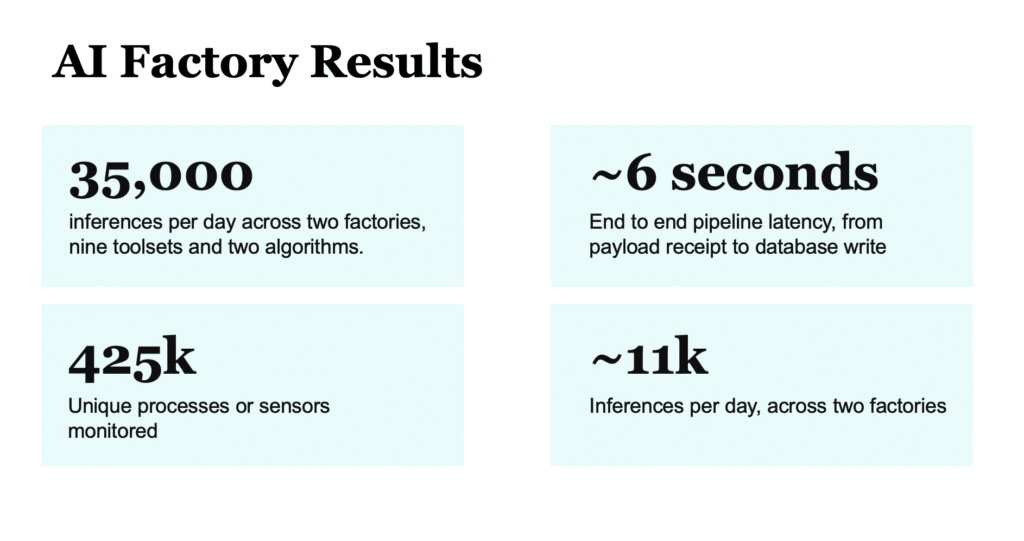

AI Factory Results

The team has built a highly flexible, scalable, and extendable ML development environment using Iguazio.

The impact:

- 35,000 inferences per day across two factories, nine toolsets and two algorithms.

- Monitoring 425,000 unique processes or sensors.

- Reducing the number of pretrained models from 12,000+ to 3,000+.

Project #2: Decreasing Production Horizon for Wafer Lifecycle

With tool capacity becoming the bottleneck (see Project #1), the team implemented smart sampling, which determines whether a commodity should be measured. The goal is to maximize the utilization of high-cost manufacturing tools, potentially avoiding the need for additional equipment purchases and unlocking significant cost savings.
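Smart sampling comes down to a per-item measure/skip decision. One possible rule, shown below as a hypothetical sketch, is to always measure when a model's predicted risk exceeds a threshold and otherwise skip, while still measuring a small random fraction of low-risk items so the model's skip decisions can be audited. The `risk` score, `threshold` and `audit_rate` are assumptions; the source does not describe Seagate's actual decision rule.

```python
import random
from typing import Optional


def should_measure(risk: float, threshold: float = 0.2,
                   audit_rate: float = 0.05,
                   rng: Optional[random.Random] = None) -> bool:
    """Decide whether to send an item to metrology (hypothetical rule)."""
    if not 0.0 <= audit_rate <= 1.0:
        raise ValueError("audit_rate must be between 0 and 1")
    if risk >= threshold:
        return True  # predicted risky: always measure
    rng = rng or random.Random()
    return rng.random() < audit_rate  # occasionally audit a "safe" item
```

Every skipped measurement frees metrology tool time, which is the capacity the project is trying to recover; the audit rate trades a little of that capacity for ongoing checks on the model.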

Seagate’s AI Factory Architecture

The architecture is similar to that of the previous project, except that the entire pipeline is structured as a Directed Acyclic Graph (DAG).

The first step is a pre-processing phase common to all models. Incoming messages are then routed to multiple models in parallel. Finally, the inference results are consolidated and written to a database.
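The graph described above (one shared pre-processing node, a fan-out to several models in parallel, then a merge node before the database write) can be sketched with the standard library alone. The preprocessing rule, the model callables and the merged record layout are hypothetical, and the real pipeline runs on the Iguazio stack described earlier rather than in a local thread pool.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List

Features = Dict[str, float]


def preprocess(payload: Dict[str, Any]) -> Features:
    """Shared first node for all models: keep numeric fields only (hypothetical rule)."""
    return {k: float(v) for k, v in payload.items() if isinstance(v, (int, float))}


def run_dag(payload: Dict[str, Any],
            models: Dict[str, Callable[[Features], float]],
            database: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Preprocess once, fan out to every model concurrently, merge, then write."""
    features = preprocess(payload)
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        # Fan-out: the same features are routed to every model at the same time.
        futures = {name: pool.submit(fn, features) for name, fn in models.items()}
        predictions = {name: fut.result() for name, fut in futures.items()}
    record = {"id": payload.get("id"), "predictions": predictions}  # merge node
    database.append(record)  # final node: a single consolidated write
    return record
```

Doing the shared pre-processing once, before the fan-out, avoids repeating that work for every model, and the merge step means each message results in a single database write.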

AI Factory Results

- End-to-end pipeline latency is ~6 seconds, from payload receipt to database write.

- The system supports ~11,000 inferences per day across two factories.

- 4,000+ models are deployed in memory simultaneously (as opposed to on-demand serving).

The Future: MLOps and AI Democratization Across Seagate

The team at Seagate is actively working to improve onboarding, collaboration and education to make adopting AI and ML more effective and scalable. Future endeavors include:

- Onboarding new practitioners

- Supporting and scaling new and existing ML projects

- Enhancing reporting and automation

- Integrating with LLMs and generative AI capabilities

- And more

To see a demo of how the system works and for more explanations about each project, watch the webinar.