Building Scalable Gen AI Apps with Iguazio and MongoDB

Wei You Pan, Ashwin Gangadhar, Yaron Haviv and Zeev Rispler | July 22, 2024

AI and generative AI can lead to major enterprise advancements and productivity gains. By offering new capabilities, they open up opportunities for enhancing customer engagement, content creation, virtual experts, process automation and optimization, and more.

According to McKinsey & Company, gen AI has the potential to deliver an additional $200-340B in value for the banking industry. One popular gen AI use case is customer service and personalization. Gen AI chatbots have quickly transformed the way that customers interact with organizations. They can handle customer inquiries and provide personalized recommendations while showing empathy and offering nuanced support tailored to each customer’s individual needs. Another less obvious use case is fraud detection and prevention. AI offers a transformative approach by automating the interpretation of regulations, supporting data cleansing, and enhancing the efficacy of surveillance systems. AI-powered systems can analyze transactions in real time and flag suspicious activities more accurately, which helps institutions take informed actions to prevent financial losses.

In this blog post, we introduce the joint MongoDB-Iguazio gen AI solution, which allows for the development and deployment of resilient and scalable gen AI applications. Before diving into how it works and its value for you, we will introduce MongoDB and Iguazio (acquired by McKinsey). We will then list the challenges enterprises are dealing with today when operationalizing gen AI applications. Finally, we’ll provide resources on how to get started.

MongoDB for end-to-end AI data management

MongoDB Atlas, an integrated suite of data services centered around a multi-cloud NoSQL database, enables developers to unify operational, analytical, and AI data services to streamline building AI-enriched applications. MongoDB’s flexible data model enables easy integration with different AI/ML platforms, allowing organizations to adapt to changes in the AI landscape without extensive modifications to the infrastructure. MongoDB meets the requirements of a modern AI and vector data store:

- Operational and Unified: MongoDB can serve as the operational data store (ODS), enabling financial institutions to efficiently handle large volumes of operational data in real time while unifying it with AI/vector data, so that AI/ML models run on the most accurate and up-to-date data. Additionally, compliance requirements and evolving regulations (e.g., 3DS2, ISO20022, PSD2) necessitate the storage and processing of even larger volumes of data in an operationally timely manner.

- Multi-Modal: Alongside structured data, there's a growing need for semi-structured and unstructured data in gen AI applications. MongoDB's multi-modal document model allows you to store and process diverse data types, including documents, network/knowledge graphs, geospatial data, and time series data. Atlas Vector Search lets you search unstructured data. You can create vector embeddings with ML models and store and index them in Atlas for retrieval augmented generation (RAG), semantic search, recommendation engines, dynamic personalization, and other use cases.

- Flexible: MongoDB’s flexible schema design enables development teams to make application changes to meet changes in data requirements and redeploy application changes in an agile manner.
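To make the vector store point above more concrete, here is a minimal sketch in Python of what "store and index" could look like. The index definition follows the shape Atlas Vector Search expects (a `fields` list with `vector` and `filter` entries), but the field names (`embedding`, `annual_fee`), the dimension count and the helper functions are illustrative assumptions, not part of the joint solution. The sketch only builds plain dictionaries, so it runs without a database connection.

```python
from typing import Any


def card_document(name: str, description: str,
                  embedding: list[float], **extra: Any) -> dict[str, Any]:
    """Combine operational fields and a vector embedding in one document.

    Extra keyword arguments illustrate the flexible schema: new fields can
    be added per document without a migration. All field names here are
    hypothetical.
    """
    return {"name": name, "description": description,
            "embedding": embedding, **extra}


def vector_index_definition(path: str, num_dimensions: int,
                            filter_paths: tuple[str, ...] = ()) -> dict[str, Any]:
    """Build an Atlas Vector Search index definition as a plain dict."""
    fields: list[dict[str, Any]] = [{
        "type": "vector",
        "path": path,                      # field that holds the embedding
        "numDimensions": num_dimensions,   # must match the embedding model
        "similarity": "cosine",
    }]
    # Optional fields that can be used to pre-filter vector queries.
    fields += [{"type": "filter", "path": p} for p in filter_paths]
    return {"fields": fields}


if __name__ == "__main__":
    doc = card_document("Basic", "A no-fee starter card",
                        [0.1, 0.2, 0.3, 0.4], annual_fee=0)
    index = vector_index_definition("embedding", 4, ("annual_fee",))
    print(doc)
    print(index)
```

In a real deployment the embedding would come from an ML model and the definition would be submitted to Atlas when creating the search index; both steps are left out here on purpose.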

Iguazio’s AI Platform

Iguazio (acquired by McKinsey) is an AI platform designed to streamline, automate and accelerate the development, deployment, and management of ML and Gen AI applications in production at scale.

Iguazio’s Gen AI-ready architecture includes capabilities for data management, model development, application deployment and LiveOps, or management and monitoring of the applications in production. The platform (now part of QuantumBlack Horizon, McKinsey’s suite of AI development tools) addresses enterprises’ two biggest challenges when advancing from gen AI proofs of concept to live implementations within business environments:

- Scalability: Gen AI operations (gen AI ops) allow for efficient and effective implementation and scaling of gen AI applications.

- Governance: Gen AI guardrails mitigate risk by directing essential monitoring, data privacy, and compliance activities.

By automating and orchestrating AI, Iguazio accelerates time-to-market, lowers operating costs, enables enterprise-grade governance and enhances business profitability.

Iguazio’s platform includes LLM customization capabilities, GPU provisioning to improve utilization and reduce cost, and hybrid deployment options (including multi-cloud or on premises). This positions Iguazio to uniquely answer enterprise needs, even in highly regulated environments, either in a self-serve or managed services model (through QuantumBlack, McKinsey’s AI arm).

Challenges to Operationalizing Gen AI

Building a gen AI or AI application starts with the demo or proof of concept (PoC) phase. However, this is only the first step. To derive business value and ensure the solution is resilient, enterprises need to be able to successfully operationalize and deploy models in production. Doing so comes with its own set of challenges. These include:

- Engineering Challenges - Deploying gen AI applications requires substantial engineering efforts from enterprises. They need to maintain technological consistency throughout the operational pipeline, set up sufficient infrastructure resources, such as an adequate number of GPUs, and ensure the availability of a team equipped with a comprehensive ML and data skillset. Currently, AI development and deployment processes are slow and fraught with friction.

- LLM Risks - When deploying LLMs, enterprises need to reduce privacy risks and comply with ethical AI standards. This includes preventing hallucinations, ensuring outputs are fair and unbiased, filtering out offensive content, protecting intellectual property and aligning with regulatory standards.

- Glue Logic and Standalone Solutions - The AI landscape is vibrant and new solutions are frequently being developed and made available. Integrating each of these solutions separately can create friction and overhead for Ops and data professionals, leading to duplicate efforts, brittle architectures, time-consuming processes and a lack of consistency.

Iguazio and MongoDB Together: High-Performing and Simplified Gen AI Operationalization

The joint Iguazio-MongoDB solution leverages the innovation of these two leading platforms. The integrated solution allows customers to streamline data processing and storage, ensuring Gen AI applications reach production while eliminating risks, improving performance and enhancing governance.

Iguazio capabilities:

- Structured and unstructured data pipelines for processing, versioning and loading documents.

- Automated flows for data preparation, tuning, validation and LLM optimization on specific data, running efficiently on elastic resources (CPUs, GPUs, etc.).

- Rapid deployment of scalable real-time serving and application pipelines that use LLMs (locally hosted or external) as well as the required data integration and business logic.

- Built-in monitoring for the LLM data, training, model and resources, with automated model re-tuning and RLHF.

- Ready-made gen AI application recipes and components.

- An open solution with support for various frameworks and LLMs and flexible deployment options (any cloud, on-prem).

- Built-in guardrails to eliminate risks and improve accuracy and control.

MongoDB capabilities:

- Unifies operational (e.g., customer profiles, sessions, transactions) and AI (e.g., AI dimensions, features) data stores in a single platform that supports structured and unstructured data, minimizing the cost and effort of managing the technical sprawl that might otherwise be needed to provide a rich dataset for enhancing AI effectiveness.

- Provides a multi-modal JSON-based data platform with a unified API for querying data in multiple ways (e.g., key-value, tabular, geospatial, network graph, time series), which matters as AI applications, and gen AI applications in particular, become increasingly multi-modal and versatile.

- Serves as a vector store (e.g., storing vector embeddings) with vector indexing and search capabilities for semantic analysis, and helps improve gen AI experiences (greater accuracy, lower hallucination risk) through a RAG architecture that combines vectors with the multi-modal operational data AI applications typically require.

- Adapts quickly to AI application changes through a single unified data platform with a flexible schema design that makes application data changes easy, and integrates with a wide partner ecosystem (e.g., Iguazio, Confluent, LangChain, LlamaIndex) to improve time-to-market.

- Deploys anywhere: MongoDB can run self-managed on-premises, in the cloud, or as a SaaS offering on leading hyperscalers, with hybrid cloud options for institutions not ready to move entirely to the public cloud.

Examples

#1 Building a Smart Customer Care Agent

For example, the joint architecture can be used for creating a smart customer care agent. Iguazio and MongoDB together could:

- Process and analyze raw data (e.g., web pages, PDFs, images) inputted by the customer or the enterprise.

- Run a batch pipeline for analyzing customer logs and a stream pipeline for live interactions.

- Store results in MongoDB, leveraging its capabilities for managing unstructured data like user age, preferences and historical transactions, together with structured data like account balance and product lists.

The diagram illustrates a production-ready gen AI agent application with its four main elements:

- Data pipeline for processing the raw data (eliminating risks, improving quality, encoding, etc.).

- Application pipelines for processing incoming requests, enriching them with data from the MongoDB multi-model store, running the agent logic and applying various guardrails and monitoring tasks.

- Development and CI/CD pipelines for fine tuning and validating models, testing the application to detect accuracy risk challenges and automatically deploying the application.

- A monitoring system collecting application and data telemetry to identify resource usage, application performance, risks, etc. The monitoring data can be used to further improve the application performance (through an RLHF integration).
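The application pipeline described in the list above can be pictured as a chain of steps that each take a request, do one job and pass it on. The following is a rough sketch in plain Python, not the Iguazio API: the `Event` class, the step functions and the in-memory `profiles` dictionary (standing in for a MongoDB lookup) are all hypothetical names chosen for illustration, and the "agent" is a placeholder where an LLM call would go.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Event:
    """A single request moving through the pipeline (hypothetical shape)."""
    user_id: str
    question: str
    profile: dict = field(default_factory=dict)
    answer: str = ""
    telemetry: list = field(default_factory=list)


Step = Callable[[Event], Event]


def enrich(profiles: dict) -> Step:
    """Enrichment step; the dict stands in for a MongoDB collection lookup."""
    def step(event: Event) -> Event:
        event.profile = profiles.get(event.user_id, {})
        return event
    return step


def agent(event: Event) -> Event:
    """Placeholder for the agent logic; a real agent would call an LLM."""
    name = event.profile.get("name", "there")
    event.answer = f"Hi {name}, you asked: {event.question}"
    return event


def guardrail(blocked_terms: set) -> Step:
    """Replace the answer if it contains any blocked term."""
    def step(event: Event) -> Event:
        if any(term in event.answer.lower() for term in blocked_terms):
            event.answer = "Sorry, I can't help with that request."
        return event
    return step


def monitor(event: Event) -> Event:
    """Record simple telemetry about the final answer."""
    event.telemetry.append({"step": "monitor", "answer_chars": len(event.answer)})
    return event


def run_pipeline(event: Event, steps: list) -> Event:
    """Apply each step in order and return the final event."""
    for step in steps:
        event = step(event)
    return event


if __name__ == "__main__":
    steps = [enrich({"u1": {"name": "Olivia"}}), agent,
             guardrail({"password"}), monitor]
    print(run_pipeline(Event("u1", "Which card has low fees?"), steps).answer)
```

Keeping each concern in its own step is what lets guardrails and monitoring be added or swapped without touching the agent logic.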

#2 Building a Hyper-Personalized Banking Agent

In this example, accompanied by a demo video, we show a banking agent based on a modular Retrieval Augmented Generation (RAG) architecture that helps customers choose the right credit card for them. The agent has access to a MongoDB Atlas data platform with a list of credit cards and a large array of customer details. When a customer chats with the agent, it chooses the best credit card for them, based on the data and additional personal customer information, and can converse with them in the most appropriate manner and tone of voice. The bank can further hyper-personalize the chat to make it more appealing to the client and improve the odds of conversion, or add guardrails to minimize AI hallucinations and improve interaction accuracy.

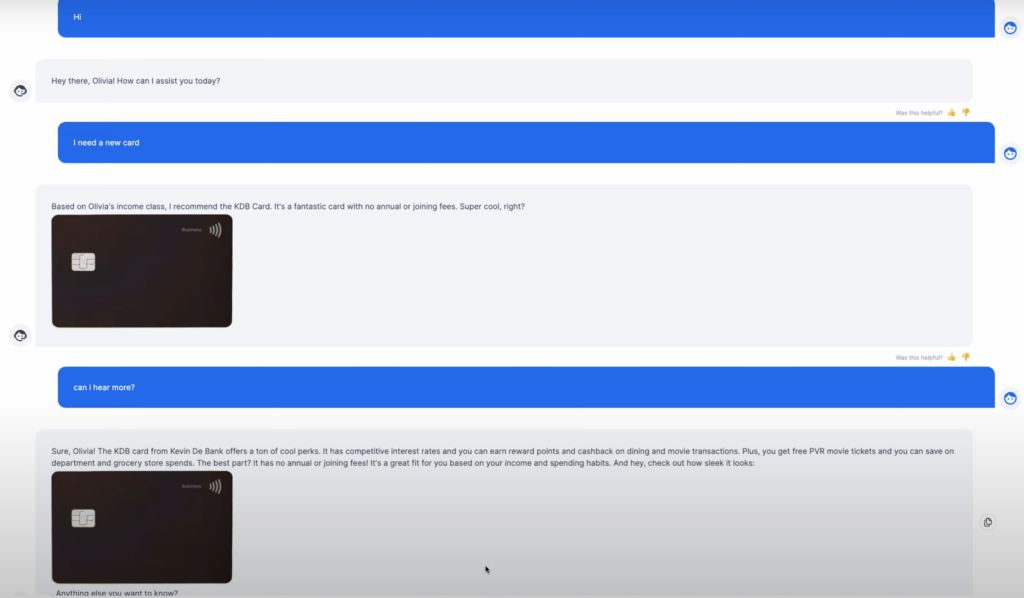

Example Customer #1: Olivia

Olivia is a young client requesting a credit card. The agent looks at her credit card history and annual income and recommends a card with low fees. The tone of the conversation is casual.

When Olivia asks for more data, the agent accesses the card information, while retaining the same youthful and fun tone.

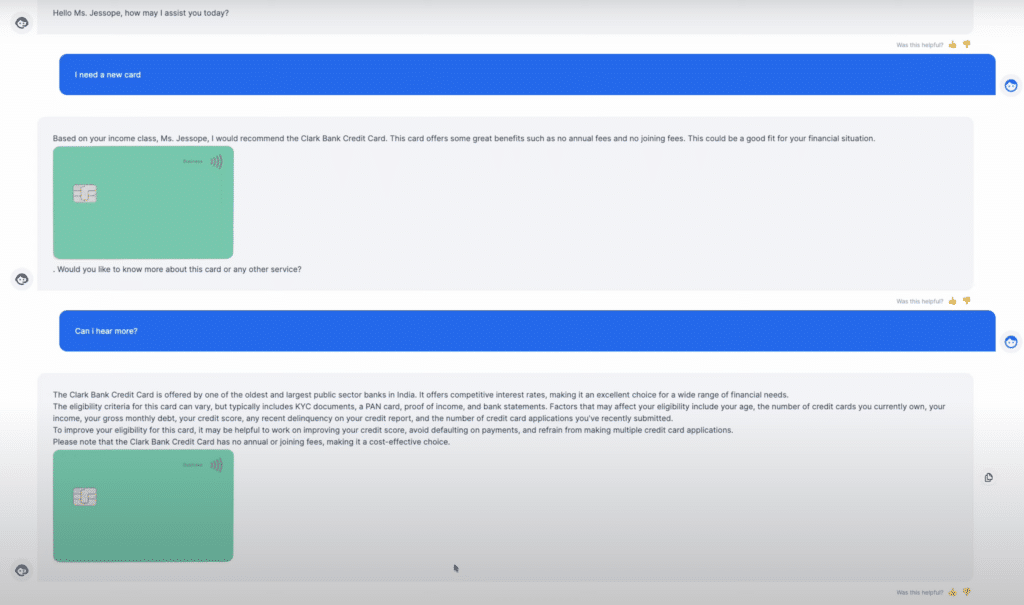

Example Customer #2: Ms. Jessope

The second example involves a more senior woman, but there is no information on her marital status, so the agent calls her “Ms.” in order to avoid an uncomfortable situation. When she asks for a new card, the agent accesses her credit card history to choose the best card for her. The conversation takes place in a respectful tone.

When she requests more information, the response is more informative and detailed than in the case of the younger client, and the language remains respectful.

How does this work, under the hood?

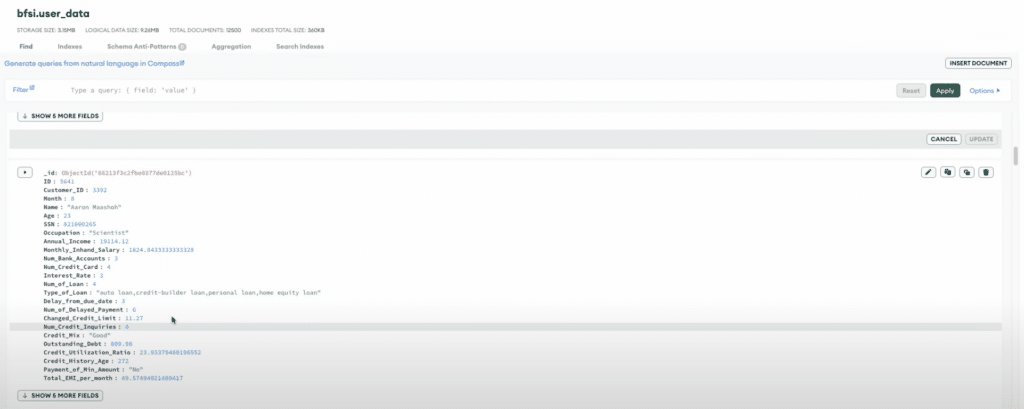

Under the hood, as you can see from the figure below, the tool has access to customer profile data in the MongoDB Atlas collection bfsi.user_data and is able to hyper-personalize its response and recommendation based on various aspects of the customer profile, such as demographics and financial status.

A RAG process is implemented using the Iguazio AI Platform with the MongoDB Atlas data platform. Atlas Vector Search is used to find the relevant operational data stored in MongoDB (card name, annual fees, client occupation, interest rates and much more) to augment the contextual data during the interaction with the LLM, to personalize the interaction, and to recommend the best course of action.
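As a rough illustration of the retrieval and augmentation steps, the sketch below builds an Atlas Vector Search aggregation pipeline and then folds the retrieved cards and the customer profile into a prompt. The `$vectorSearch` stage and the `vectorSearchScore` metadata are standard Atlas features, but the index name (`card_vector_index`), the field names (`embedding`, `annual_fee`, `perks`) and both helper functions are assumptions made for this example, not the demo's actual code.

```python
from typing import Any


def vector_search_pipeline(query_vector: list[float],
                           index: str = "card_vector_index",   # hypothetical name
                           path: str = "embedding",            # hypothetical field
                           limit: int = 3,
                           num_candidates: int = 50) -> list[dict[str, Any]]:
    """Build an aggregation pipeline that retrieves the closest cards."""
    if num_candidates < limit:
        raise ValueError("num_candidates must be at least as large as limit")
    return [
        {"$vectorSearch": {
            "index": index,
            "path": path,
            "queryVector": query_vector,
            "numCandidates": num_candidates,
            "limit": limit,
        }},
        # Keep only the fields the prompt needs, plus the similarity score.
        {"$project": {"_id": 0, "name": 1, "annual_fee": 1, "perks": 1,
                      "score": {"$meta": "vectorSearchScore"}}},
    ]


def build_prompt(question: str, profile: dict[str, Any],
                 cards: list[dict[str, Any]]) -> str:
    """Augment the user question with profile data and retrieved cards."""
    card_lines = "\n".join(
        f"- {card['name']}: annual fee {card['annual_fee']}" for card in cards
    )
    return (
        f"Customer profile: {profile}\n"
        f"Candidate cards:\n{card_lines}\n"
        f"Question: {question}\n"
        "Recommend one card using only the information above."
    )


if __name__ == "__main__":
    pipeline = vector_search_pipeline([0.1, 0.2, 0.3], limit=2, num_candidates=10)
    print(pipeline)
    print(build_prompt("Which card suits me?", {"age": 24},
                       [{"name": "Basic", "annual_fee": 0}]))
```

With a driver such as PyMongo, the pipeline would be passed to `collection.aggregate(pipeline)` on the card collection, and the query vector would come from the same embedding model used at indexing time.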

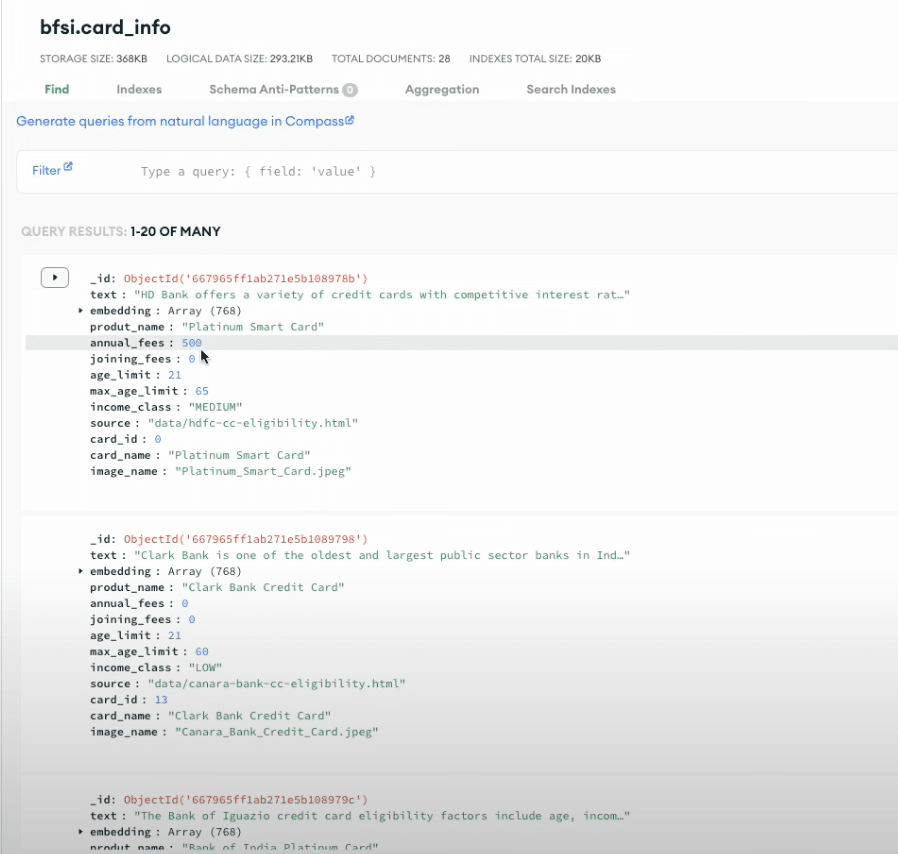

The virtual agent is also able to talk to another agent tool that has a view of the credit card data in bfsi.card_info (such as card name, annual and joining fees, card perks such as cashback and more), to pick a credit card that would best suit the needs of the customer.

To ensure the client gets the best choice of card, a guardrail is added on the data: as a built-in component of the agent tool, it filters the candidate cards according to the data gathered by the agent. In addition, another set of guardrails is added to the results stage, validating that the card offered really does suit the customer (for example, by comparing the card with the optimal ones recommended for the customer’s age range).
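The two guardrail stages can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the eligibility rule (a minimum income per card), the age bands and all field names (`annual_income`, `min_income`) are invented for the example, and the real guardrails in the demo may work quite differently.

```python
def filter_cards(cards: list[dict], profile: dict) -> list[dict]:
    """Data guardrail: keep only cards the customer is eligible for.

    The income threshold rule is a hypothetical eligibility check.
    """
    return [card for card in cards
            if profile["annual_income"] >= card["min_income"]]


def age_band(age: int) -> str:
    """Map an age to a coarse band (band boundaries are illustrative)."""
    if age < 30:
        return "under_30"
    if age < 60:
        return "30_to_59"
    return "60_plus"


def validate_recommendation(card_name: str, eligible_cards: list[dict],
                            profile: dict, optimal_by_band: dict) -> bool:
    """Result guardrail: the recommended card must be eligible and must be
    among the cards considered optimal for the customer's age band."""
    eligible_names = {card["name"] for card in eligible_cards}
    optimal = optimal_by_band.get(age_band(profile["age"]), set())
    return card_name in eligible_names and card_name in optimal


if __name__ == "__main__":
    cards = [{"name": "Basic", "min_income": 0},
             {"name": "Platinum", "min_income": 100_000}]
    profile = {"age": 24, "annual_income": 40_000}
    eligible = filter_cards(cards, profile)
    optimal = {"under_30": {"Basic"}, "60_plus": {"Platinum"}}
    print(validate_recommendation("Basic", eligible, profile, optimal))
```

Running the filter before the LLM sees the candidates and the validation after it answers means a hallucinated or unsuitable card name is caught at the results stage rather than shown to the customer.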

This whole process is extremely easy to set up and configure using the Iguazio AI Platform, with seamless integration to MongoDB. The user only needs to create the agent workflow and connect it to MongoDB Atlas and everything works automatically, out of the box.

Lastly, as you can see from the demo above, the agent was able to leverage vector search capabilities of MongoDB Atlas to retrieve, summarize and personalize the messaging on the card information and benefits in the same tone as the user’s.

Benefits of Using Iguazio and MongoDB’s Integrated Solution

- AI / Gen AI Operationalization with minimal engineering: MongoDB and Iguazio provide a single, robust data management system for all flavors of data, with built-in elasticity and scalability. This joint solution enables managing and evolving data from prototype to production to monitoring in a simple and resilient manner without requiring significant engineering efforts.

- Scalability and performance: The joint solution handles large volumes of data and complex data transformations with ease, while maintaining high levels of performance and ensuring reliability and accuracy.

- Security and compliance: Both MongoDB and Iguazio prioritize security and compliance, making them well-suited for regulated industries like finance. Together, we provide clients with robust security features, including encryption, access controls, and compliance monitoring, to ensure that sensitive data remains protected and regulatory requirements are met.

- Hybrid environments: MongoDB and Iguazio are available together on your infrastructure of choice: in the cloud, on-premises or as a hybrid cloud solution. This provides you with the flexibility and customization you need to answer your MLOps/LLMOps and DataOps challenges.

- Unification of data from multiple sources: Gen AI applications involve multiple types of data, like geospatial, graphs, tables and vectors. Each data type requires considerations like security, scalability and metadata management. This creates data management complexity. MongoDB and Iguazio unify all data management needs, like logging, auditing and more, in a single solution. This ensures consistency, faster performance and significantly less overhead.

- Diverse use cases and widespread applications: MongoDB's flexible data model accommodates diverse data types, while Iguazio enables customers to develop and deploy AI models at scale. This allows customers to build diverse applications and derive actionable insights from their data so they can drive innovation across various use cases, including fraud detection, risk management and personalized customer experiences.

Register for our joint webinar on July 30th at 9am PT, "Building Scalable Customer-Facing Gen AI Applications Effectively & Responsibly".