MLOps for Generative AI in the Enterprise

Yaron Haviv and Nayur Khan | July 25, 2023

Generative AI has already had a massive impact on business and society, igniting innovation while delivering ROI and real economic value. According to research by QuantumBlack, AI by McKinsey, titled “The economic potential of generative AI”, generative AI use cases have the potential to add $2.6T to $4.4T annually to the global economy. This potential spans more than 60 use cases across all industries. About 75% of this impact is concentrated in marketing and sales, software engineering, customer operations, and product and R&D. This is because generative AI has the potential to automate work activities that absorb 60% to 70% of employees’ time today, especially mundane activities.

In this post, we’ll dive into the potential of generative AI and see how organizations should approach leveraging Large Language Models (LLMs) in live business applications. We’ll also discuss how to do it responsibly by embedding Responsible AI principles into generative AI while taking a human-centered approach.

This article is based on our recent webinar “MLOps for Generative AI” with guests Nayur Khan, partner at QuantumBlack, AI by McKinsey, Mara Pometti, associate design director, McKinsey & Company, and Yaron Haviv, CTO and co-founder of Iguazio. You can watch the webinar here.

The Power and Promise of Generative AI

In less than nine months, generative AI has come to dominate the tech landscape. Tools like Midjourney, Stable Diffusion, Dall-E 2, ChatGPT, Bard and the AI-powered version of Bing have burst onto the scene, revolutionizing our technological capabilities as consumers and business users. They have also been widely adopted. ChatGPT is reported to have reached 100 million users just two months after its launch. This revolution is comparable to the ones brought about by the iPhone and the Internet.

The flywheel effect of innovation is further driven by open source. For example, there are more than 140 open source LLMs (and growing by the week) democratizing the technology, and making generative AI and its possibilities accessible to a wider engineering audience.

As a result, companies have been rapidly incorporating generative AI into their product offerings. Microsoft is incorporating co-pilots into Windows and Office. Google has incorporated generative AI into many of its technology solutions. Adobe has enhanced much of its product suite with generative AI. Online retailers such as Walmart and Amazon are also looking to use the technology to assist customers and improve the functionality of their stores. And the list goes on.

Four Generative AI Use Case Archetypes

There is a wide variety of possible use cases for generative AI, but they are not all being developed and adopted at the same pace. QuantumBlack has identified four use case archetypes that are being developed first: the four C’s.

1. Concision (Virtual Expert) - This is a virtual expert, helping summarize and extract insights and efficiently retrieving information to assist with problem-solving.

2. Customer Engagement - This takes the form of a personalized customer co-pilot that assists and engages with customers, or of intelligent chatbots for enhanced 24/7 customer support.

3. Coding - The coding archetype can assist with generating, prototyping, documenting and translating code, and it can generate synthetic data.

4. Content Generation - An archetype that generates emails, documents and presentations and creates personalized messaging and next-product-to-buy recommendations.

Three Generative AI Adoption Approaches

There are three broad ways organizations are adopting generative AI today.

1. Taker

- Using the API of commercial or open source LLMs

- Using a prompt to pass additional domain context

Level of effort, complexity, and cost: Low

2. Shaper

- Fine-tuning pre-trained LLMs with additional data

- Less need to pass domain information within a prompt

- Enables organizations to add their own proprietary data

Level of effort, complexity, and cost: Medium

3. Maker

- Creating and training a model from scratch

- Enables organizations to take control of the data

Level of effort, complexity, and cost: High

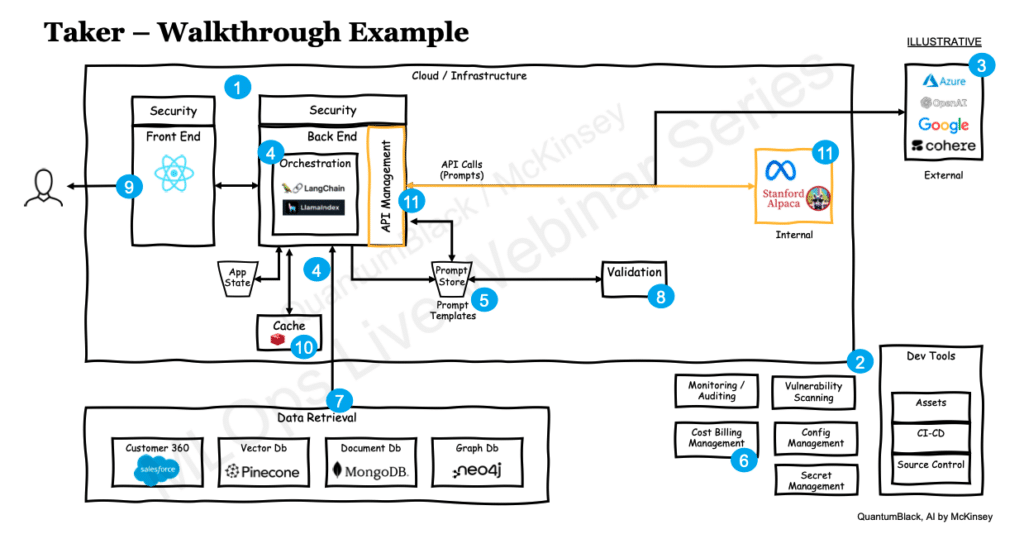

Taker: Walkthrough Example

As an example, let’s dive into the “taker” approach and discover what it requires from organizations, engineering teams and data professionals.

General Infrastructure and Application Considerations

1. Application Architecture

The application should follow good software engineering architectural principles. An example of this could be the 12-factor app guidelines. This includes concerns such as:

- Source control

- Configuration management

- Treating backing services as attached resources

2. Environment - An environment that follows proper DevOps and DevSecOps practices, meaning:

- CI/CD

- Infra as code

- Shift-left security

- Vulnerability scanning

- Secret management

- Infrastructure:

  - Cloud or on-premises

- Ops considerations:

  - Monitoring and auditing capabilities

  - Experimenting with parallelizing API calls

  - Watching for API rate limits for third party APIs

  - Resiliency (handling a high volume of users or non-responsive APIs)
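To make the rate-limit and resiliency points concrete, here is a minimal sketch of retrying a third-party API call with exponential backoff. It is an illustration under stated assumptions, not a specific provider's client: `RateLimitError` and `call_with_backoff` are hypothetical names, and `call` stands in for whatever function actually sends the request.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical error a provider client raises when a rate limit is hit."""


def call_with_backoff(call, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Run `call()`, retrying with exponential backoff and jitter on failure."""
    for attempt in range(max_attempts):
        try:
            return call()
        except (RateLimitError, TimeoutError):
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the error to the caller
            # Wait 1x, 2x, 4x ... the base delay, plus up to 10% random jitter.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
```

The `sleep` parameter is injectable so the retry logic can be tested without real waiting. The jitter spreads out retries when many parallel calls hit the limit at the same time.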

3. Model selection - There are several general considerations when choosing which model to use, some of which involve tradeoffs, such as:

- Cost

- Accuracy

- Token limits

- Speed

- Privacy

- Proprietary vs. open source

LLM Ops Challenges

4. Orchestration of API calls

Choose a mechanism to do this: either custom code or a framework.

5. Prompt stores

Decide where to store prompt templates. They should live outside the application, since they will change a lot, and they should also be under version control.
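As a rough illustration of a prompt store, the sketch below keeps every version of a template and renders the latest one by default. It is an in-memory stand-in only: in practice the templates would live in an external, version-controlled location, and the `PromptStore` class and its method names are assumptions made for this example.

```python
from string import Template


class PromptStore:
    """In-memory stand-in for an external, versioned prompt template store."""

    def __init__(self):
        self._templates = {}  # template name -> list of versions, oldest first

    def save(self, name, text):
        """Store a new version of a template and return its version number."""
        versions = self._templates.setdefault(name, [])
        versions.append(text)
        return len(versions)  # versions are numbered from 1

    def render(self, name, version=None, **values):
        """Fill in a template; uses the latest version unless one is given."""
        versions = self._templates[name]
        text = versions[-1] if version is None else versions[version - 1]
        return Template(text).substitute(**values)


store = PromptStore()
store.save("summary", "Summarize: $text")
store.save("summary", "Summarize for $audience: $text")
prompt = store.render("summary", audience="executives", text="Q2 results")
```

Because old versions are kept, the application can pin a known-good version while a newer template is still being evaluated.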

6. Cost management for API calls

Being able to track and manage costs will become very important, not just for infrastructure and environment, but also for API calls to external LLM providers.
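One simple way to start tracking API costs is to record token counts per call and attribute them to a team or use case. The sketch below assumes per-token pricing; the `CostTracker` class, the model name and the prices are all hypothetical placeholders, not real provider rates.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class CostTracker:
    # Hypothetical USD prices per 1,000 tokens: model -> (input, output).
    prices: dict
    totals: dict = field(default_factory=lambda: defaultdict(float))

    def record(self, model, team, input_tokens, output_tokens):
        """Add one call's cost to the team's running total and return it."""
        in_price, out_price = self.prices[model]
        cost = (input_tokens * in_price + output_tokens * out_price) / 1000
        self.totals[team] += cost
        return cost


tracker = CostTracker(prices={"model-a": (0.5, 1.5)})  # made-up prices
tracker.record("model-a", team="marketing", input_tokens=2000, output_tokens=1000)
```

Keeping totals per team makes it possible to set budgets or alerts before a single use case consumes most of the spend.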

7. Additional domain context

There will be a need to provide additional domain context to the prompt. This could come from a vector database, but equally there could be many other sources of information (depending on the domain information you need), e.g. a Customer 360, a graph database, a data warehouse, etc.
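The general pattern of adding domain context can be sketched as "retrieve, then build the prompt". The example below uses a toy word-overlap ranking purely as a stand-in for a vector database or other source; the function names and the prompt wording are assumptions for illustration.

```python
def retrieve(question, documents, top_k=2):
    """Rank documents by how many question words they share (toy retriever)."""
    words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents):
    """Place the retrieved snippets ahead of the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return f"Use only this context:\n{context}\n\nQuestion: {question}"


docs = [
    "Refunds are issued within 14 days",
    "Our office is in London",
    "Refunds require a receipt",
]
prompt_with_context = build_prompt("how are refunds issued", docs)
```

Swapping the toy `retrieve` function for a real similarity search, a Customer 360 lookup or a warehouse query leaves `build_prompt` unchanged.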

8. Prompt drift validation

You may need additional validation that continually checks whether what the LLM returns is still valid.

9. Prompt guarding

You may need to guard against injection and misuse.
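As a very rough sketch of prompt guarding, user input can be screened before it reaches the LLM. The deny-list below is illustrative only and would not stop a determined attacker; the patterns, the length limit and the `guard_user_input` name are assumptions for this example.

```python
import re

# Illustrative deny-list only; real injection attempts are far more varied.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"reveal (your|the) (system )?prompt", re.I),
]


def guard_user_input(text, max_chars=2000):
    """Return (allowed, reason) for a piece of user input."""
    if len(text) > max_chars:
        return False, "input too long"
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(text):
            return False, "possible prompt injection"
    return True, "ok"
```

A check like this is best treated as one layer among several, alongside output validation and restricting what the application lets the model do.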

10. Caching

You may want to consider caching of the questions/answers to improve performance and save costs.
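A minimal sketch of question/answer caching is shown below. It assumes exact-match caching on a normalized prompt, which only helps when the same question recurs; `ResponseCache` and the `call_llm` argument are hypothetical names, with `call_llm` standing in for the real API call.

```python
import hashlib


class ResponseCache:
    """Exact-match cache keyed on the model name and the normalized prompt."""

    def __init__(self):
        self._store = {}
        self.hits = 0

    @staticmethod
    def _key(model, prompt):
        # Lowercase and collapse whitespace so trivial variations share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(f"{model}\n{normalized}".encode()).hexdigest()

    def get_or_call(self, model, prompt, call_llm):
        """Return a cached answer, or call the LLM once and remember the result."""
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        answer = call_llm(prompt)
        self._store[key] = answer
        return answer
```

Including the model name in the key prevents one model's answer from being served for another. A production cache would also need expiry, since answers can become stale.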

11. Experiment with other LLMs

Design the application so that models can be “swapped”, and experiment with alternatives such as Stanford Alpaca.
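Swapping models is easier when the application depends on a small common interface instead of one provider's client. The sketch below assumes a single `complete` method; the `TextModel` protocol and the two toy model classes are placeholders for real model wrappers.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only interface the application depends on."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Toy stand-in for a wrapper around one model."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperModel:
    """Toy stand-in for a wrapper around a different model."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def compare(models: dict, prompt: str) -> dict:
    """Send the same prompt to every candidate model and collect the outputs."""
    return {name: model.complete(prompt) for name, model in models.items()}


results = compare({"echo": EchoModel(), "upper": UpperModel()}, "hello")
```

With this shape, trying a new model means writing one more wrapper class, and the side-by-side outputs can feed whatever evaluation the team uses.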

Additional considerations, among many others:

- Bias

- Fairness

- IP

- Hallucinations

- Regulatory transparency

The Human-Centered Approach for Generative AI

While generative AI facilitates a truly remarkable step change in productivity, it also raises some additional questions. We need to ensure responsible use of generative AI to avoid unwanted consequences that might harm people or erode trust in the information they consume.

One of the steps needed to operationalize responsible AI is designing and building guardrails to keep models in check. To achieve that, organizations should address the connection between generative AI services and the people who come into contact with their recommendations and outputs. This means that organizations should try to capture users' needs, values and norms and embed them into the models’ design. This can be achieved by converting users’ requirements into well-defined metrics that help monitor the models’ alignment with users’ expected outcomes and mitigate risk.

Let’s look at a fictional example, demonstrating how an organization can interact and engage with customers through a human-centered approach to generative AI.

Example: How generative AI could power customer engagement

‘Organization X’ has large growth aspirations and wants to engage its customers in meaningful ways. With the knowledge that each customer is unique and has individual behaviors, needs, and engagement preferences, the organization aspires to employ a hyper-personalized engagement solution - with messages (emails, in-app notifications, and SMS) tailored to each individual customer.

Their solution is built by creating a pipeline of ML models and LLMs. Running the solution requires the generation of millions of unique messages constructed based on the varying needs, habits, and histories of each customer.

The solution’s engine uses customer and behavior data that feeds predictive and generative AI models. It identifies individual customer needs and relevant offers. Then, it generates, schedules, and sends sequences of emails, push notifications and SMS marketing campaigns that are 100% tailored to each individual customer’s needs and engagement preferences.

The organization estimates this will cut the time needed to produce marketing campaigns and generate revenue uplift from increased cross-sell and upsell.

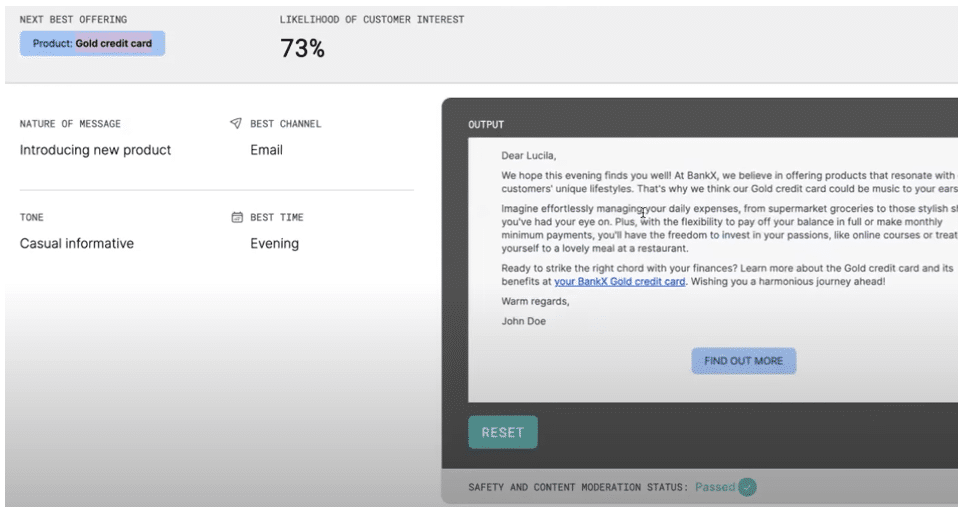

Let's look at an example customer: Lucila, a 54-year-old long-term customer.

First, generative AI is used to transform large amounts of customer data into short text summaries that highlight important information about Lucila. This informs customer engagement activities and also builds the foundation for generating automated campaigns tailored to who Lucila is, not just her demographics or purchasing behavior.

Sequences of messages are then queued for Lucila. LLMs generate the messages based on information from the ML models. These models show that Lucila may have a need for a credit card to build her credit history. The best channel for Lucila is email, and the best time to engage with her is in the evening. Using this information, an email is generated for Lucila, personalized based on her needs (even including terms like “music to your ears”, which are likely to resonate with a singer like Lucila).

Building Guardrails

The design of this future customer engagement solution is based on human-centered practices, developed following research and interviews with customers and subject matter experts from marketing, customer insights, and legal/risk within the organization. By using different human-centered methods, ranging from user interviews to design thinking for AI, the needs of both business stakeholders and customers are gathered and then translated into technical requirements, which inform the prompt engineering. Eventually, they inform which outcomes the models should return.

To ensure Responsible AI is embedded into the development process, state-of-the-art guardrails have been implemented. This is done to ensure that each message sent to customers is reliable and trustworthy. Metrics such as toxicity, harmfulness, bias and stereotyping, and hallucinations are defined and used as benchmarks to track content moderation and the trustworthiness of the content generated by the model. In addition, the guardrails are designed by capturing human feedback from a human validation process, which supported the improvement of the prompt engineering for generating messages.
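One way such metrics could act as a gate is to compare each message's scores against agreed thresholds before it is sent. The sketch below assumes the scores already exist, on a 0 to 1 scale, from separate evaluators or human review; the metric names, threshold values and the `check_message` function are hypothetical.

```python
# Hypothetical maximum acceptable score per metric, each on a 0-1 scale.
THRESHOLDS = {"toxicity": 0.1, "bias": 0.2, "hallucination": 0.3}


def check_message(scores, thresholds=THRESHOLDS):
    """Return the metrics that are missing or above their threshold."""
    # A missing score counts as the worst case (1.0), so the message is held.
    return [
        metric
        for metric, limit in thresholds.items()
        if scores.get(metric, 1.0) > limit
    ]


failed = check_message({"toxicity": 0.02, "bias": 0.05, "hallucination": 0.4})
# A non-empty list means the message is held back for human review.
```

Treating a missing score as a failure keeps a message from slipping through when an evaluator did not run.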

Taking a human-centered approach when creating AI systems helps align human needs with an organization’s objectives.

Conclusion

Enterprises have a lot to gain by implementing generative AI. From becoming more efficient and productive to offering new services and capabilities, generative AI can boost activities across R&D, sales, customer engagement, and more. However, it is essential to ensure Responsible AI guardrails are implemented when adopting generative AI and developing with LLMs. The human-centered approach is a way to responsibly guide AI development and, in particular, to align human and organizational expectations with the AI’s outcomes.

You can watch the entire webinar, which also includes information about MLOps in the era of generative AI and LLMs, here.