MLRun v1.7 Launched — Solidifying Generative AI Implementation and LLM Monitoring

Gilad Shaham | November 1, 2024

As the open-source maintainers of MLRun, we’re proud to announce the release of MLRun v1.7. MLRun is an open-source AI orchestration tool that accelerates the deployment of gen AI applications, with features such as LLM monitoring, fine-tuning, data management, guardrails and more. We provide ready-made scenarios that can be easily implemented by teams in organizations.

This new version brings substantial enhancements that address the growing demands of gen AI deployments, with a particular focus on monitoring LLMs. Additional updates introduce performance optimizations, multi-project management, and more.

MLRun v1.7 is the culmination of months of hard work and collaboration between the Iguazio engineering team, MLRun users and the open-source community. We’ve listened to what our users are saying and have designed this version (and the upcoming ones) to address needs and gaps in managing ML and AI across the lifecycle. With this new version, users ranging from individual contributors to large enterprise teams will be able to deploy AI applications faster and with more flexibility than before.

Brief Intro for Newcomers: What is MLRun?

MLRun is an open-source AI orchestration framework for managing ML and generative AI applications across their lifecycle, to accelerate their productization. It automates data preparation, model tuning, customization, validation and optimization of ML models, LLMs and live AI applications over elastic resources. MLRun enables the rapid deployment of scalable real-time serving and application pipelines, while providing built-in observability and flexible deployment options, supporting multi-cloud, hybrid, and on-prem environments.

Why This Release Focuses on Monitoring

Organizations are increasingly relying on LLMs for advanced gen AI applications, ranging from co-pilots to chatbots to call center analysis, and more. However, LLMs operate on complex and evolving data inputs, such as natural language. This makes them more susceptible to unique risks like hallucinations, bias, model misuse, PII leakage, harmful content and inaccuracy.

Without proper monitoring, these risks can jeopardize business value and user trust. This is an acute problem for organizations: it makes them wary of deploying gen AI applications, because they cannot predict what the end result will be or what risks it will bring.

LLM monitoring helps ensure the integrity and operational stability of LLMs in production environments. Effective monitoring can de-risk LLM deployments by ensuring sustained performance, protecting privacy, detecting hallucinations and more. As a result, reliable LLM monitoring is foundational to any gen AI deployment.

Coupling monitoring with fine-tuning helps detect and mitigate the risks associated with gen AI. Monitoring surfaces problems, and fine-tuning feeds those findings back into the model through an ongoing loop, continuously improving its quality so that risks are mitigated before they reach production.

In this version, we address these needs, adding more capabilities that allow users to reliably monitor and fine-tune LLMs, so they can feel confident when deploying gen AI applications.

Now without further ado, let’s dive into the new release and what it means for our users.

Key Features in MLRun v1.7: LLM Monitoring and Beyond

Here’s what’s new in MLRun v1.7:

New Monitoring Capabilities

Flexible Monitoring Options: Monitor LLMs in Your Tool of Choice

MLRun v1.7 offers a flexible application infrastructure that allows users to monitor their models using external tools and applications, rather than being limited to built-in monitoring features.

Users can now plug in their own monitoring tools (such as external logging, alerting, or metric systems) or establish connections through APIs and integration points, to track and visualize model performance. For example, MLRun can now integrate with Evidently, an open-source third-party tool for ML model monitoring.

Such a level of flexibility allows you to integrate MLRun with your preferred monitoring solutions, extending beyond previous built-in capabilities. This is especially helpful when you have specific tools you prefer to use or when you need to track custom metrics that go beyond what the native system offers.
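To make the plug-in idea concrete, here is a minimal, framework-agnostic sketch of the pattern: each pluggable application receives the same batch of logged model calls and returns its own named metrics, including custom ones a built-in system may not offer. The `InferenceRecord`, `MonitoringApp` and `run_monitoring` names are hypothetical and used only for illustration; they are not MLRun's API, so consult the MLRun documentation for the actual monitoring-application classes.

```python
from __future__ import annotations

import math
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class InferenceRecord:
    """One logged model call (hypothetical schema, for illustration only)."""
    prompt: str
    response: str
    latency_ms: float


class MonitoringApp(ABC):
    """Hypothetical plug-in interface: each app turns a batch of records into metrics."""

    name = "base"

    @abstractmethod
    def compute_metrics(self, records: list[InferenceRecord]) -> dict[str, float]:
        ...


class LatencyApp(MonitoringApp):
    name = "latency"

    def compute_metrics(self, records):
        if not records:
            return {}
        latencies = sorted(r.latency_ms for r in records)
        # Nearest-rank 95th percentile
        idx = max(0, math.ceil(0.95 * len(latencies)) - 1)
        return {"mean_ms": sum(latencies) / len(latencies), "p95_ms": latencies[idx]}


class ResponseLengthApp(MonitoringApp):
    """A custom metric a built-in system might not offer: answer length in words."""

    name = "response_length"

    def compute_metrics(self, records):
        if not records:
            return {}
        lengths = [len(r.response.split()) for r in records]
        return {"mean_words": sum(lengths) / len(lengths)}


def run_monitoring(apps: list[MonitoringApp], records: list[InferenceRecord]) -> dict[str, float]:
    """Run every plugged-in app on the same batch and prefix each metric with the app name."""
    results: dict[str, float] = {}
    for app in apps:
        for key, value in app.compute_metrics(records).items():
            results[f"{app.name}.{key}"] = value
    return results


batch = [
    InferenceRecord("q1", "a b", 100.0),
    InferenceRecord("q2", "c", 200.0),
    InferenceRecord("q3", "d e f", 300.0),
]
print(run_monitoring([LatencyApp(), ResponseLengthApp()], batch))
# {'latency.mean_ms': 200.0, 'latency.p95_ms': 300.0, 'response_length.mean_words': 2.0}
```

Because every app shares one interface, adding a new metric (or forwarding results to an external alerting system) means writing one more class rather than changing the monitoring loop.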

Monitor Unstructured Data Easily

V1.7 offers advanced monitoring capabilities for natural language and other unstructured data, making it easier to process such data and create monitoring metrics from it. Since LLMs consume and produce unstructured text, this aligns with their core characteristics and provides more accurate insight into their behavior.

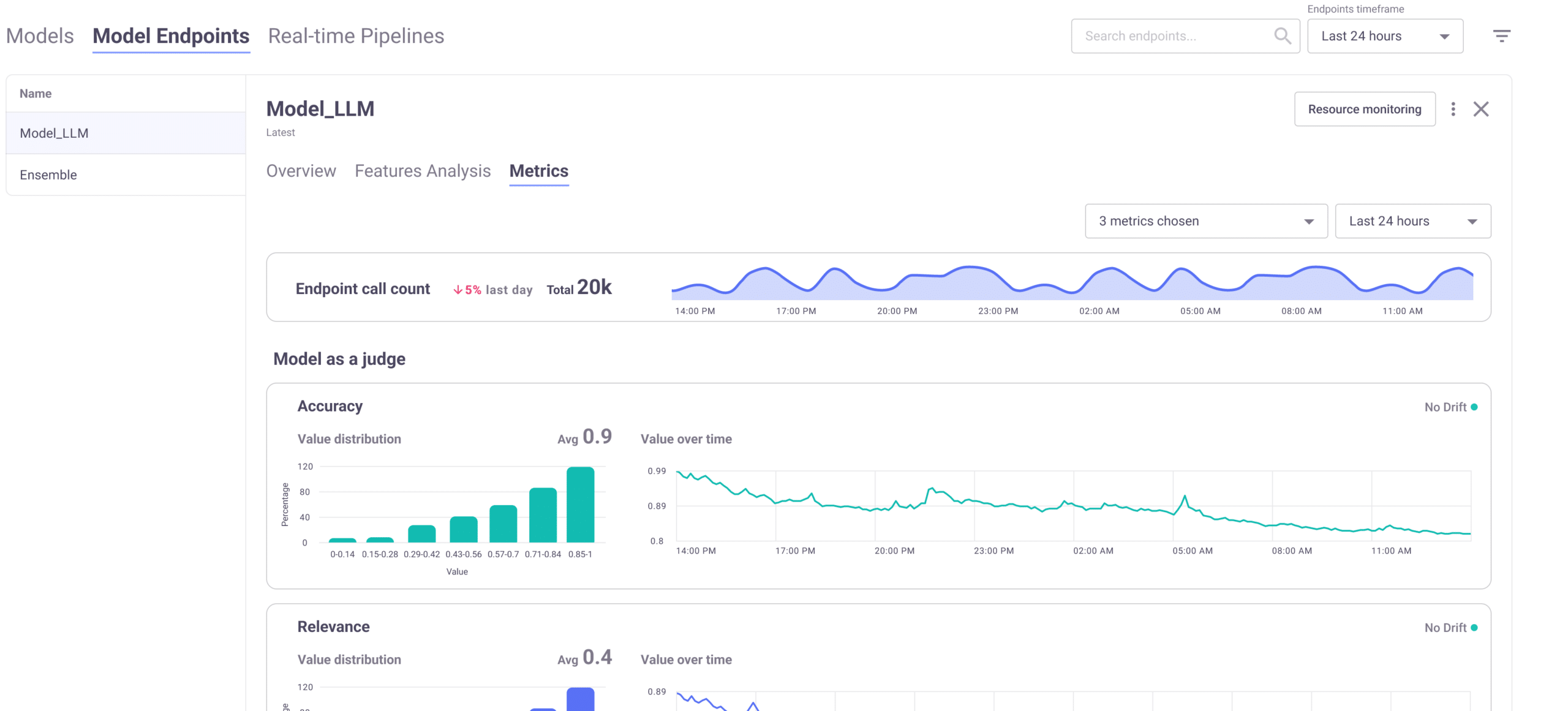

For example, a common way to monitor LLMs is to have another model act as a judge, an approach known as LLM-as-a-Judge. The judge runs as an additional application that monitors the model by evaluating its outputs.
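The following sketch shows the general LLM-as-a-Judge loop: each question/answer pair is wrapped in a grading prompt, sent to a judge model, and the judge's score is parsed and aggregated into monitoring metrics. It is an illustration under stated assumptions, not MLRun's implementation: the prompt text, `stub_judge_llm` (an offline stand-in for a real judge-model call) and `judge_batch` are all hypothetical.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical grading prompt sent to the judge model.
JUDGE_PROMPT = (
    "Rate the ANSWER to the QUESTION from 1 (poor) to 5 (excellent) "
    "for relevance and accuracy. Reply with a single digit.\n"
    "QUESTION: {question}\nANSWER: {answer}"
)


def stub_judge_llm(prompt: str) -> str:
    """Offline stand-in for a real judge-model call (hypothetical)."""
    answer = prompt.rsplit("ANSWER:", 1)[1].strip().lower()
    # Crude heuristic so the sketch runs without an LLM: punish empty or evasive answers.
    if not answer or "i don't know" in answer:
        return "1"
    return "4"


def judge_batch(
    pairs: Iterable[Tuple[str, str]],
    judge_llm: Callable[[str], str],
    threshold: int = 3,
) -> dict:
    """Score each (question, answer) pair with the judge and summarize the batch."""
    scores = []
    for question, answer in pairs:
        reply = judge_llm(JUDGE_PROMPT.format(question=question, answer=answer))
        # Real LLMs don't always follow the format: take the first digit,
        # clamp it to the 1-5 scale, and default to the worst score.
        digits = [ch for ch in reply if ch.isdigit()]
        scores.append(min(5, max(1, int(digits[0]))) if digits else 1)
    if not scores:
        return {"count": 0}
    return {
        "count": len(scores),
        "mean_score": sum(scores) / len(scores),
        # Fraction of answers the judge rated below the threshold
        "flagged_rate": sum(s < threshold for s in scores) / len(scores),
    }


pairs = [
    ("What does APR stand for?", "Annual percentage rate."),
    ("What is my loan rate?", "I don't know."),
]
print(judge_batch(pairs, stub_judge_llm))
# {'count': 2, 'mean_score': 2.5, 'flagged_rate': 0.5}
```

Passing the judge in as a plain callable keeps the scoring logic independent of any particular model provider; in a real deployment `stub_judge_llm` would be replaced by a call to the judge LLM.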

Gain Visibility and Control of How LLMs are Performing

MLRun v1.7 introduces a new endpoint metrics UI. It lets you easily view and investigate model endpoint metrics such as accuracy and response time. You can also visualize trends and customize the monitoring time frame for deeper visibility.
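Conceptually, "customizing the time frame" and "visualizing trends" boil down to aggregating endpoint events over a chosen window and over equal sub-buckets of it. The minimal sketch below assumes a hypothetical `EndpointEvent` record with a timestamp, a latency and a correctness flag; it illustrates the aggregation only and does not reflect how MLRun stores or queries endpoint metrics.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class EndpointEvent:
    """One served request on a model endpoint (hypothetical schema)."""
    timestamp: datetime
    latency_ms: float
    correct: bool  # e.g. derived from delayed ground-truth labels


def window_metrics(events: list[EndpointEvent], end: datetime, window: timedelta) -> dict:
    """Aggregate metrics over the half-open time frame [end - window, end)."""
    start = end - window
    hits = [e for e in events if start <= e.timestamp < end]
    if not hits:
        return {"count": 0}
    return {
        "count": len(hits),
        "accuracy": sum(e.correct for e in hits) / len(hits),
        "mean_latency_ms": sum(e.latency_ms for e in hits) / len(hits),
    }


def trend(events: list[EndpointEvent], end: datetime, window: timedelta, bucket: timedelta) -> list[dict]:
    """Split the time frame into equal buckets so changes over time become visible."""
    n = int(window / bucket)
    return [window_metrics(events, end - window + (i + 1) * bucket, bucket) for i in range(n)]


end = datetime(2024, 11, 1, 12, 0)
events = [
    EndpointEvent(datetime(2024, 11, 1, 9, 0), 999.0, True),  # outside a 2-hour frame
    EndpointEvent(datetime(2024, 11, 1, 10, 30), 100.0, True),
    EndpointEvent(datetime(2024, 11, 1, 11, 15), 200.0, False),
    EndpointEvent(datetime(2024, 11, 1, 11, 45), 300.0, True),
]
print(window_metrics(events, end, timedelta(hours=2)))
print(trend(events, end, timedelta(hours=2), timedelta(hours=1)))
```

Narrowing `window` zooms in on recent behavior, while a smaller `bucket` exposes how accuracy and latency drift within that frame.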

The new UI gives you greater control and insight into how models are performing and where improvements are needed. In the future, these metrics could also be used to identify risks and determine where guardrails are needed.

Please share your feedback on these capabilities. We will continue to enhance and expand them based on what you share with us.

Demo: Gen AI Banking Chatbot

What do these new monitoring and fine-tuning capabilities look like in a business gen AI application? Here’s a demo of a gen AI banking chatbot that uses MLRun’s new monitoring capabilities to fine-tune it and ensure it answers only banking-related questions.

Additional Capabilities

Deploy Docker Images with Ease

V1.7 also simplifies the deployment of Docker images, making it easier for data scientists and ML engineers to run custom applications. This enhancement significantly reduces the setup time and complexity previously required, opening up new integration possibilities with bespoke UIs and dashboards for deployed models.

Streamline Management and Easily Identify Issues

The new cross-project view in MLRun v1.7 addresses a key pain point for organizations managing multiple projects. You can now monitor active jobs, workflows and other tasks across different projects from a single dashboard. This enhances operational efficiency, improves collaboration for teams managing complex AI environments and helps quickly identify issues, such as failed jobs or bottlenecks.

Community-Driven Innovations and Performance Boosts

Beyond the features above, MLRun v1.7 incorporates numerous features and optimizations based on user feedback, including improved UI responsiveness, better handling of large data volumes and a range of fixes aimed at improving overall usability.

Looking Ahead: What’s Next for MLRun

This version is an important milestone in the MLRun journey, but it is not the end of the tool’s evolution.

The MLRun team is committed to continuing this momentum in future releases, with plans to expand the LLM monitoring capabilities and introduce even greater flexibility for gen AI application and model management and deployment. Version 1.8 and beyond will focus on deeper integrations, enhanced visualization tools, and more user-requested features. Stay tuned!

We’re looking forward to hearing your feedback about MLRun and your needs for upcoming versions, so we encourage you to share your insights and requirements with the community.

For the full list of updates and improvements, and to access the new tutorials, visit the MLRun Changelog.